Perception of Ultrasonic Friction Pulses

The demand for natural haptic feedback on touch screens, such as those in smartphones and car control panels, has grown rapidly in recent years. One such feedback technology, vibrotactile stimulation, is already incorporated into many consumer devices, but it provides only a general vibration sensation to the user's fingers and does not work well on mechanically grounded screens.

Friction-based tactile feedback has recently been demonstrated as a promising alternative actuation approach. The development of these novel interfaces has raised interest in touch-based human-machine interaction while also highlighting the need for high-fidelity tactile rendering strategies. These new approaches are limited, however, by how little is known about the sensory mechanisms that mediate human perception of frictional cues.

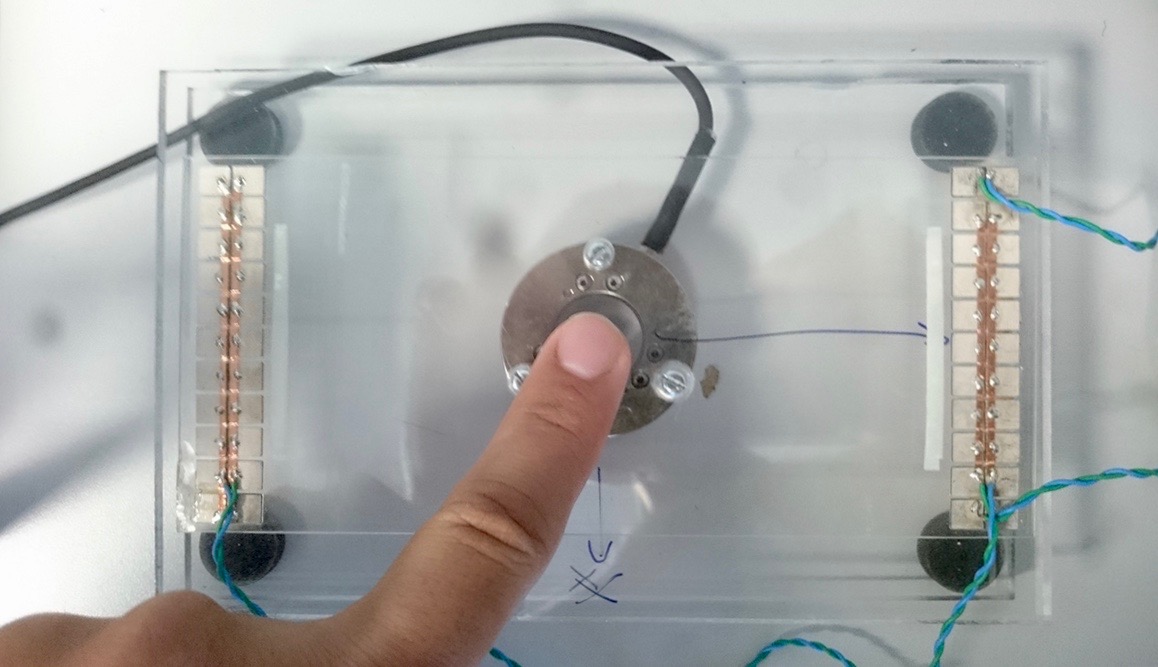

As shown in the figure, virtual geometric features and complex textures (e.g., buttons, edges, patterns, fabrics) can be rendered on flat screens via ultrasonic vibrations that modulate the friction force experienced by the user. However, optimizing these tactile sensations requires one to understand which components of the frictional signal are critical and how the intensity of each component should be scaled according to the dynamics of the interaction.

In this project, we investigate how ultrasonic vibration waveforms with different durations and slopes are perceived by humans, as well as what kind of high-level percepts (such as edges, bumps and holes) they generate. To that end, we physically measure the frictional patterns that the finger experiences during ultrasonic stimulation, and we then correlate these results with the verbal reports of the users.
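The two waveform parameters named above, duration and slope, can be made concrete with a small sketch. This is an illustration only, not the stimulus code used in the project: the trapezoidal envelope shape, the function names `pulse_envelope` and `modulated_carrier`, and the sample rate and carrier frequency are all assumptions chosen for the example.

```python
import math


def pulse_envelope(duration_s, ramp_s, sample_rate_hz, peak=1.0):
    """Return a trapezoidal amplitude envelope (hypothetical pulse shape).

    The envelope rises linearly for ramp_s seconds, holds at `peak`, and
    falls linearly for ramp_s seconds. A shorter ramp means a steeper slope;
    ramp_s == 0 gives a rectangular pulse.
    """
    if ramp_s < 0 or 2 * ramp_s > duration_s:
        raise ValueError("both ramps must fit inside the pulse duration")
    n_samples = int(round(duration_s * sample_rate_hz))
    envelope = []
    for i in range(n_samples):
        t = i / sample_rate_hz
        if ramp_s > 0 and t < ramp_s:
            level = t / ramp_s                 # rising edge
        elif ramp_s > 0 and t > duration_s - ramp_s:
            level = (duration_s - t) / ramp_s  # falling edge
        else:
            level = 1.0                        # plateau
        envelope.append(peak * level)
    return envelope


def modulated_carrier(envelope, carrier_hz, sample_rate_hz):
    """Multiply a sinusoidal carrier by the envelope, sample by sample."""
    return [
        a * math.sin(2 * math.pi * carrier_hz * i / sample_rate_hz)
        for i, a in enumerate(envelope)
    ]


# Assumed example values: a 10 ms pulse with 2 ms ramps, a 30 kHz carrier,
# and a 200 kHz sample rate (placeholders, not measured parameters).
SAMPLE_RATE_HZ = 200_000
gentle = pulse_envelope(0.010, 0.002, SAMPLE_RATE_HZ)
abrupt = pulse_envelope(0.010, 0.0, SAMPLE_RATE_HZ)
drive_signal = modulated_carrier(gentle, 30_000, SAMPLE_RATE_HZ)
```

Varying `duration_s` and `ramp_s` independently gives a family of pulses of the kind whose perception could then be compared, for example a gentle ramp against an abrupt step of the same duration.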

The long-term goals of this project are to understand how to generate tactile features that users perceive unambiguously and to use this knowledge to guide the design of friction-based stimuli for future tactile displays.
