Quantifying the Quality of Haptic Interfaces

Shape-Changing Haptic Interfaces

Generating Clear Vibrotactile Cues with Magnets Embedded in a Soft Finger Sheath

Salient Full-Fingertip Haptic Feedback Enabled by Wearable Electrohydraulic Actuation

Cutaneous Electrohydraulic (CUTE) Wearable Devices for Pleasant Broad-Bandwidth Haptic Cues

Modeling Finger-Touchscreen Contact during Electrovibration

Perception of Ultrasonic Friction Pulses

Vibrotactile Playback for Teaching Sensorimotor Skills in Medical Procedures

CAPT Motor: A Two-Phase Ironless Motor Structure

4D Intraoperative Surgical Perception: Anatomical Shape Reconstruction from Multiple Viewpoints

Visual-Inertial Force Estimation in Robotic Surgery

Enhancing Robotic Surgical Training

AiroTouch: Naturalistic Vibrotactile Feedback for Large-Scale Telerobotic Assembly

Optimization-Based Whole-Arm Teleoperation for Natural Human-Robot Interaction

Finger-Surface Contact Mechanics in Diverse Moisture Conditions

Computational Modeling of Finger-Surface Contact

Perceptual Integration of Contact Force Components During Tactile Stimulation

Dynamic Models and Wearable Tactile Devices for the Fingertips

Novel Designs and Rendering Algorithms for Fingertip Haptic Devices

Dimensional Reduction from 3D to 1D for Realistic Vibration Rendering

Prendo: Analyzing Human Grasping Strategies for Visually Occluded Objects

Learning Upper-Limb Exercises from Demonstrations

Minimally Invasive Surgical Training with Multimodal Feedback and Automatic Skill Evaluation

Efficient Large-Area Tactile Sensing for Robot Skin

Haptic Feedback and Autonomous Reflexes for Upper-limb Prostheses

Gait Retraining

Modeling Hand Deformations During Contact

Intraoperative AR Assistance for Robot-Assisted Minimally Invasive Surgery

Immersive VR for Phantom Limb Pain

Visual and Haptic Perception of Real Surfaces

Haptipedia

Gait Propulsion Trainer

TouchTable: A Musical Interface with Haptic Feedback for DJs

Exercise Games with Baxter

Intuitive Social-Physical Robots for Exercise

How Should Robots Hug?

Hierarchical Structure for Learning from Demonstration

Fabrication of HuggieBot 2.0: A More Huggable Robot

Learning Haptic Adjectives from Tactile Data

Feeling With Your Eyes: Visual-Haptic Surface Interaction

S-BAN

General Tactile Sensor Model

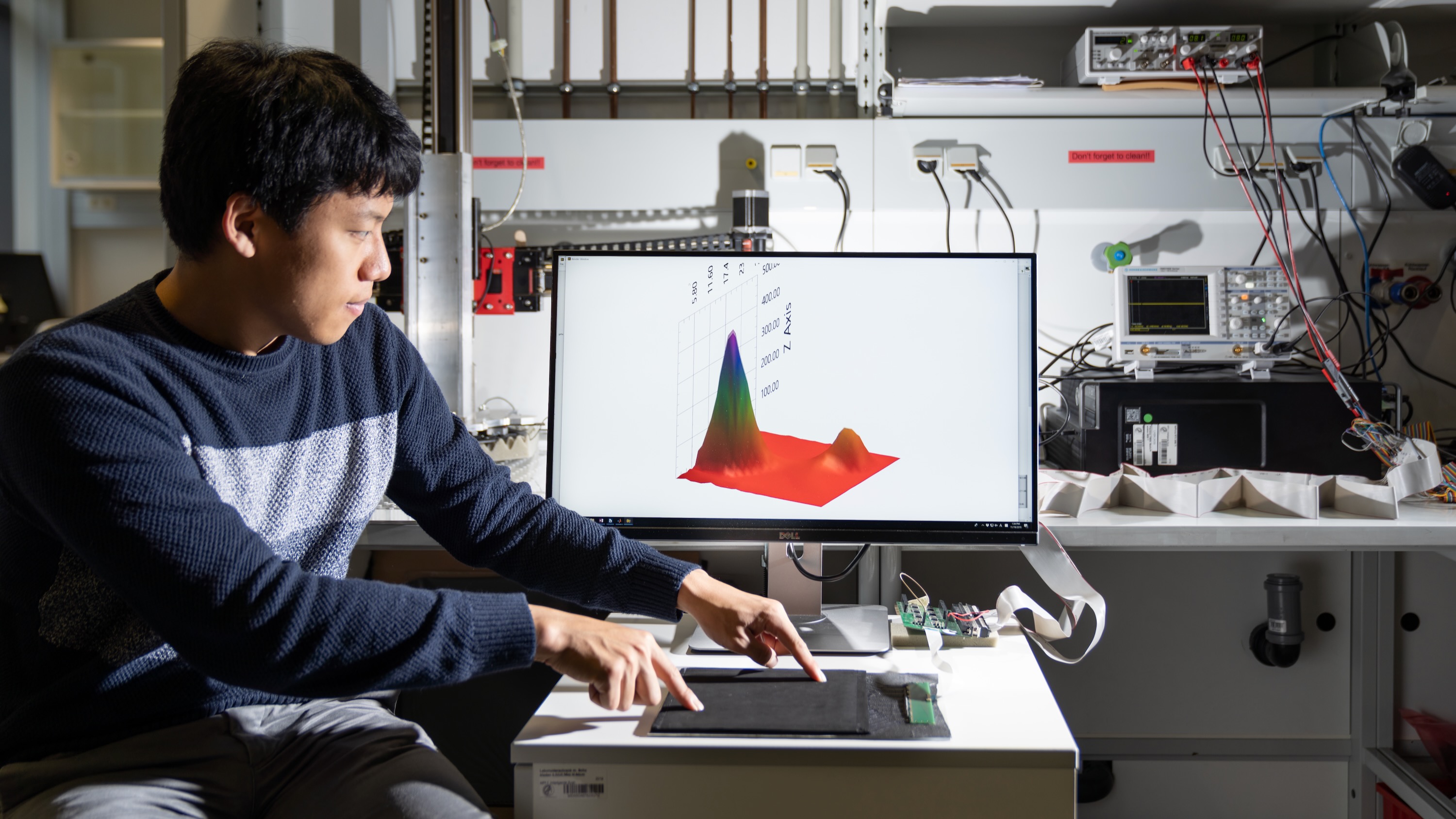

Insight: a Haptic Sensor Powered by Vision and Machine Learning

Artificial Touch Sensing and Processing

Humans and animals possess sophisticated skin that not only protects the body but is also exquisitely sensitive to physical contact. In contrast, most robots have no tactile sensing, since commercial touch sensors tend to be expensive, difficult to integrate into real robots, and rather limited in size, robustness, sensitivity, bandwidth, and/or reliability. Nonetheless, the emerging consensus is that robots working in unstructured environments can greatly benefit from touch information.

How can we give robots an effective sense of touch? The Haptic Intelligence Department pursues a range of approaches to create practical tactile sensors for robotics. We particularly work to create robust tactile sensors that can be easily manufactured and provide useful contact information across a broad bandwidth. Some of our past projects in this domain focused on covering the large surfaces of a robot's body with soft sensors that detect normal force. Other projects aim to deliver much finer tactile sensation on regions like the fingertips.

Our ongoing projects are exploring resistive, capacitive, visual, and auditory methods for transducing touch into electrical signals that can be acquired by a robotic system. Our approaches often take inspiration from nature, such as the structure of the human fingertip or the shape and material properties of an elephant whisker. Since the relationship between tactile contact and sensor output is usually complex and difficult to model, we frequently employ machine learning to interpret such measurements in real time. Some of these sensing-focused investigations are also inspired by our research in human-robot interaction.

Another important thrust of Haptic Intelligence (HI) research is analyzing the rich time-series data from existing and new tactile sensors. In these studies, a human experimenter or professional typically wields a tool, and our measurement system records multimodal data from the physical interaction taking place. We then create a processing pipeline that can achieve the task at hand, whether that is recognizing a surface from a large library of past experiences or identifying the feel of a puncture through a particular type of tissue as it occurs. These software-focused aspects fall under the umbrella of artificial touch processing, complementing our hardware work on artificial tactile sensing.
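To make the idea of recognizing a surface from a library of past experiences concrete, here is a minimal sketch. It is not the department's actual pipeline: the two features (RMS amplitude and zero-crossing rate), the nearest-neighbor rule, the synthetic sine-wave "recordings", and all function names are assumptions chosen only for illustration.

```python
import math

def features(signal, sample_rate_hz):
    """Summarize one vibration recording as (RMS amplitude, zero-crossing rate in Hz)."""
    n = len(signal)
    mean = sum(signal) / n
    centered = [s - mean for s in signal]
    rms = math.sqrt(sum(c * c for c in centered) / n)
    # Count sign changes between consecutive samples.
    crossings = sum(1 for a, b in zip(centered, centered[1:]) if (a < 0) != (b < 0))
    # Two crossings per cycle, so this approximates the dominant frequency.
    zcr_hz = crossings * sample_rate_hz / (2.0 * n)
    return (rms, zcr_hz)

def recognize(signal, sample_rate_hz, library):
    """Return the label of the library entry closest to the signal in feature space."""
    rms, zcr = features(signal, sample_rate_hz)

    def distance(entry):
        ref_rms, ref_zcr = entry[1]
        # Relative differences keep either feature from dominating due to its units.
        return (abs(rms - ref_rms) / (ref_rms + 1e-12)
                + abs(zcr - ref_zcr) / (ref_zcr + 1e-12))

    return min(library, key=distance)[0]

def synth(amplitude, freq_hz, sample_rate_hz=1000, duration_s=1.0):
    """Hypothetical stand-in for a recorded tool vibration: a pure sine wave."""
    n = int(sample_rate_hz * duration_s)
    return [amplitude * math.sin(2 * math.pi * freq_hz * i / sample_rate_hz)
            for i in range(n)]

# Library of "past experiences": one labeled feature vector per known surface.
library = [
    ("smooth", features(synth(0.1, 40), 1000)),
    ("rough", features(synth(1.0, 150), 1000)),
]

# A new recording that resembles the rough surface but is not identical to it.
query = synth(0.9, 140)
label = recognize(query, 1000, library)
print(label)
```

Real tactile recordings are multimodal and far noisier than these sine waves, which is one reason learned models are usually preferred over hand-picked features like the ones assumed here.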