Haptipedia

Creating haptic experiences often entails inventing, modifying, or selecting specialized hardware. However, experience designers are rarely engineers, and 30 years of haptic inventions lie buried in a fragmented literature that describes devices by their mechanics rather than by their potential purposes.

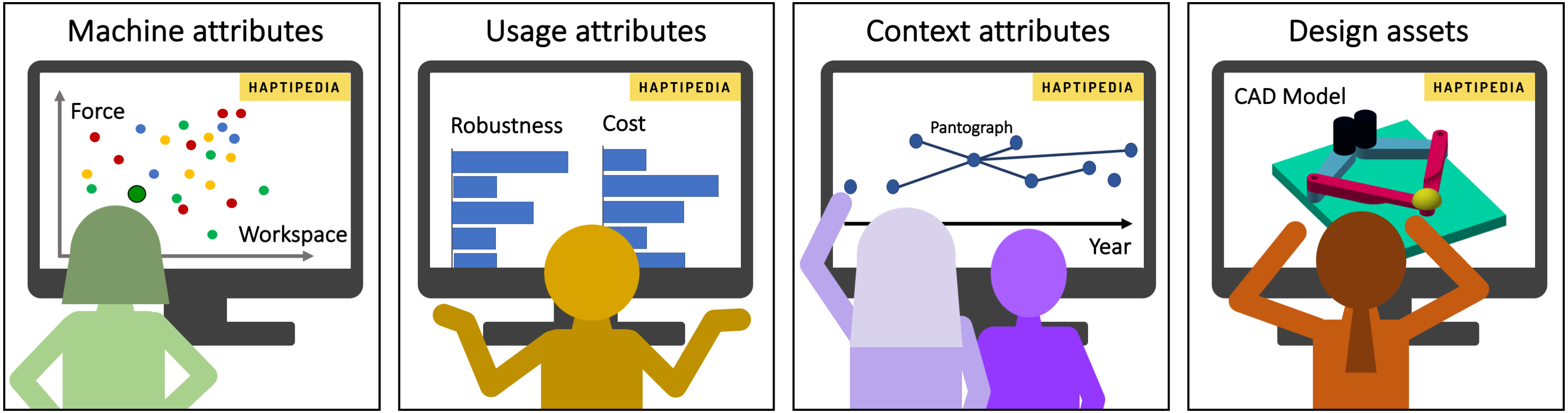

We conceived of Haptipedia to unlock this trove of examples. Haptipedia provides a practical taxonomy, database, and visualization for efficiently navigating this fragmented corpus using metadata that matters to both device and experience designers. Through it, designers can search and browse a database of 105 grounded force-feedback devices with an online visualization, examine their design trade-offs, and repurpose them into novel devices and interactions.
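To make the idea of searching a device database by attribute more concrete, here is a minimal Python sketch of filtering a catalog of device records. The `GFFDevice` fields, the `search` helper, and the catalog entries are hypothetical illustrations chosen for this example; they are not Haptipedia's actual schema, data, or interface.

```python
from dataclasses import dataclass


# Hypothetical record type: field names are illustrative only,
# not Haptipedia's actual taxonomy or schema.
@dataclass(frozen=True)
class GFFDevice:
    name: str
    degrees_of_freedom: int  # number of degrees of freedom the device supports
    outputs_torque: bool     # True if the device renders torque (not only force)


def search(devices, min_dof=0, needs_torque=False):
    """Return devices meeting the requested attributes, sorted by degrees of freedom."""
    hits = [
        d for d in devices
        if d.degrees_of_freedom >= min_dof and (d.outputs_torque or not needs_torque)
    ]
    return sorted(hits, key=lambda d: d.degrees_of_freedom)


# Made-up example entries, for illustration only (not real Haptipedia data).
catalog = [
    GFFDevice("knob-prototype", 1, True),
    GFFDevice("desktop-stylus", 3, False),
    GFFDevice("arm-exoskeleton", 12, True),
]

if __name__ == "__main__":
    for d in search(catalog, min_dof=2):
        print(d.name, d.degrees_of_freedom)
```

Keeping each device as a flat, typed record makes attribute-based filtering and side-by-side comparison of trade-offs straightforward; a real catalog would need many more attributes and a richer query model.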

To design Haptipedia, we asked: what taxonomy of attributes can best delineate haptic devices for both device and experience designers? What subset of engineering attributes is most informative? Which other attributes, missing from the literature, do users care about? Which missing attributes can be estimated by an expert device designer?

Haptipedia's design was driven by both a systematic review of the haptic device literature and rich input from diverse haptic designers. We focused on grounded force-feedback (GFF) devices because they are the earliest subset of haptic technology and exhibit rich device variation and considerable maturity in both research and commercial settings. From simple haptic knobs to robotic arms with a dozen degrees of freedom, GFF devices typically measure the user's motion and output force and/or torque in response. We developed Haptipedia iteratively: we screened 2812 haptics publications, selected 105 papers that described a haptic device, extracted device attributes from the device documentation, built a GFF taxonomy, database, and visualization, and evaluated them with users. Device and experience designers provided input on three major iterations of Haptipedia's three components (taxonomy, database, and visualization) during demonstrations at haptics conferences, focus group sessions, and in-depth individual interviews.

We show that 1) the Haptipedia taxonomy provides a framework and lexicon for describing various aspects of GFF devices, 2) our taxonomy and visualization let users assess device trade-offs and overall hands-on feel, and select relevant devices from a large corpus, and 3) our process can inform design of future platforms for other technology subsets (e.g., 3D fabrication technology or head-mounted displays).

In ongoing work on this project, we plan to analyze salient features and patterns in the current dataset, investigate ways to crowdsource the entry and validation of data for new haptic devices, create Haptipedias for other types of haptic interfaces, and assess Haptipedia's utility and impact in haptics and the broader design community over time.

You can interact with the Haptipedia visualization at http://haptipedia.org/
