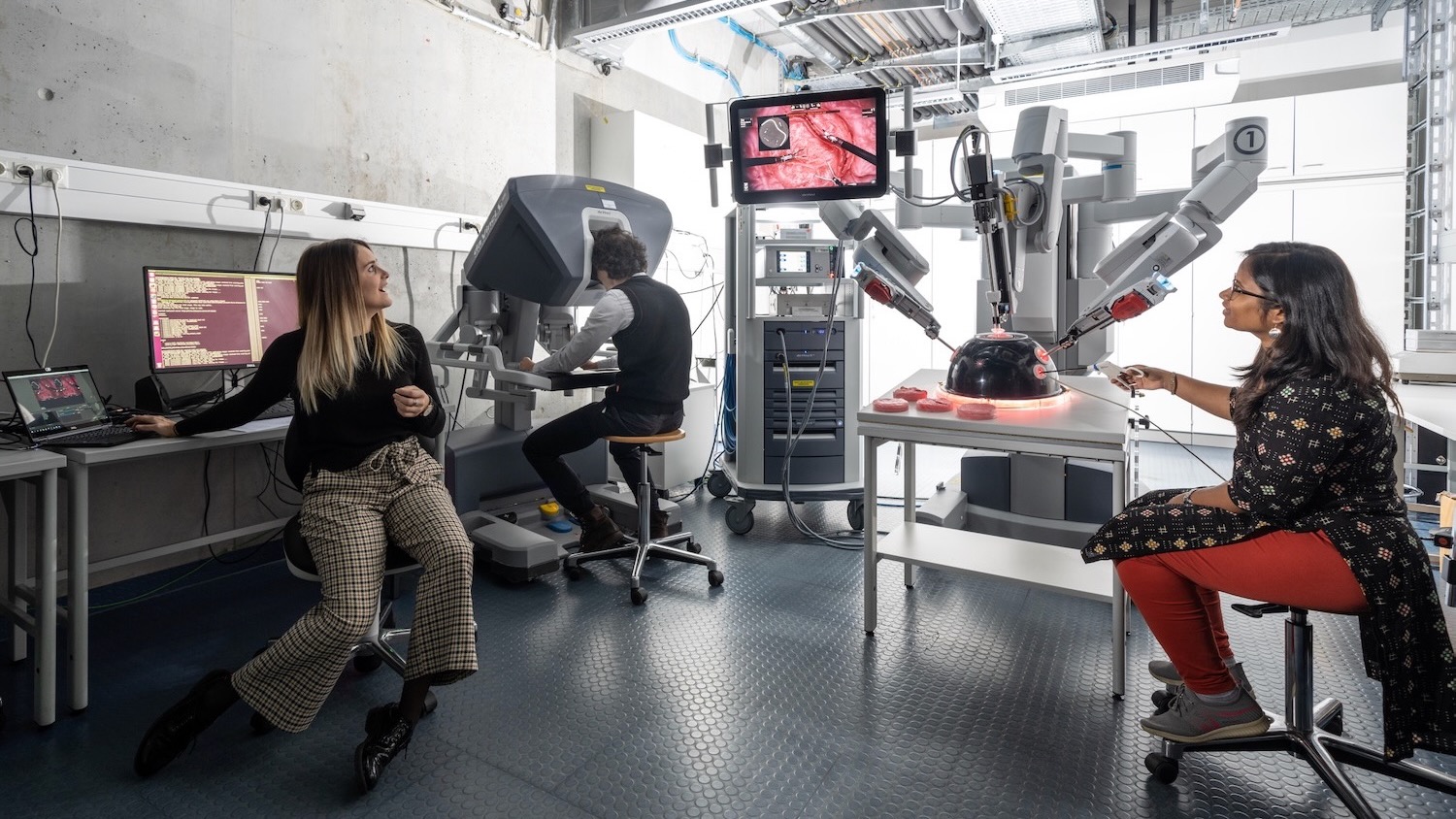

Immersive Teleoperation

Commonly used in minimally invasive robotic surgery and hazardous material handling, telerobotic systems empower humans to manipulate items by remotely controlling a robot. The user continually sends movement commands and receives feedback via the teleoperation interface, which is where we focus our attention. How can telerobotic systems help the operator perform tasks with outcomes that are as good as (or even better than) those accomplished via direct manipulation? We work to create new ways to capture operator input, deliver haptic feedback, support perception of the remote environment, and otherwise augment the operator's abilities, and we systematically study how these technologies affect the operator. We are particularly interested in multimodal feedback that blends visual, auditory, and haptic cues to make the experience of controlling a remote robot more immersive.

Our previous work on visual augmented reality for robotic surgery led us to explore the potential for improving what the surgeon sees during procedures. The prevailing imaging paradigm centers on a stereo endoscope (two offset RGB images) with collocated illumination, which makes depth perception challenging. We thus started exploring other imaging modalities, additional viewpoints, new camera designs, and computational tools for 3D reconstruction in minimally invasive surgery.

Remotely accomplishing complex tasks such as surgical suturing benefits from a rich bidirectional mechanical interaction. Because force sensors are costly, delicate, and difficult to integrate, and because adding force feedback tends to destabilize bilateral teleoperators, most such systems include no haptic cues; the operator has to learn to rely on what they can see. However, estimating haptic interactions through vision incurs high cognitive load, especially for beginners. Thus, we work on inventing and refining clever ways of stably providing haptic feedback during teleoperation, often by focusing on tactile rather than kinesthetic cues.

A main thrust of our earlier work centered on naturalistic vibrotactile feedback of the robot's contact vibrations, as this approach is both simple and highly effective. We have studied how this form of vibrotactile feedback and other haptic cues affect learners, aiming to accelerate learning. As highlighted on a subsequent page, we have also adapted naturalistic vibrotactile feedback to the control of large-scale construction machines, and we created a general optimization-based algorithm for teleoperation of a wide variety of robots and construction machines.