Human Pose, Shape and Action

3D Pose from Images

2D Pose from Images

Beyond Motion Capture

Action and Behavior

Body Perception

Body Applications

Pose and Motion Priors

Clothing Models (2011-2015)

Reflectance Filtering

Learning on Manifolds

Markerless Animal Motion Capture

Multi-Camera Capture

2D Pose from Optical Flow

Body Perception

Neural Prosthetics and Decoding

Part-based Body Models

Intrinsic Depth

Lie Bodies

Layers, Time and Segmentation

Understanding Action Recognition (JHMDB)

Intrinsic Video

Intrinsic Images

Action Recognition with Tracking

Neural Control of Grasping

Flowing Puppets

Faces

Deformable Structures

Model-based Anthropometry

Modeling 3D Human Breathing

Optical flow in the LGN

FlowCap

Smooth Loops from Unconstrained Video

PCA Flow

Efficient and Scalable Inference

Motion Blur in Layers

Facade Segmentation

Smooth Metric Learning

Robust PCA

3D Recognition

Object Detection

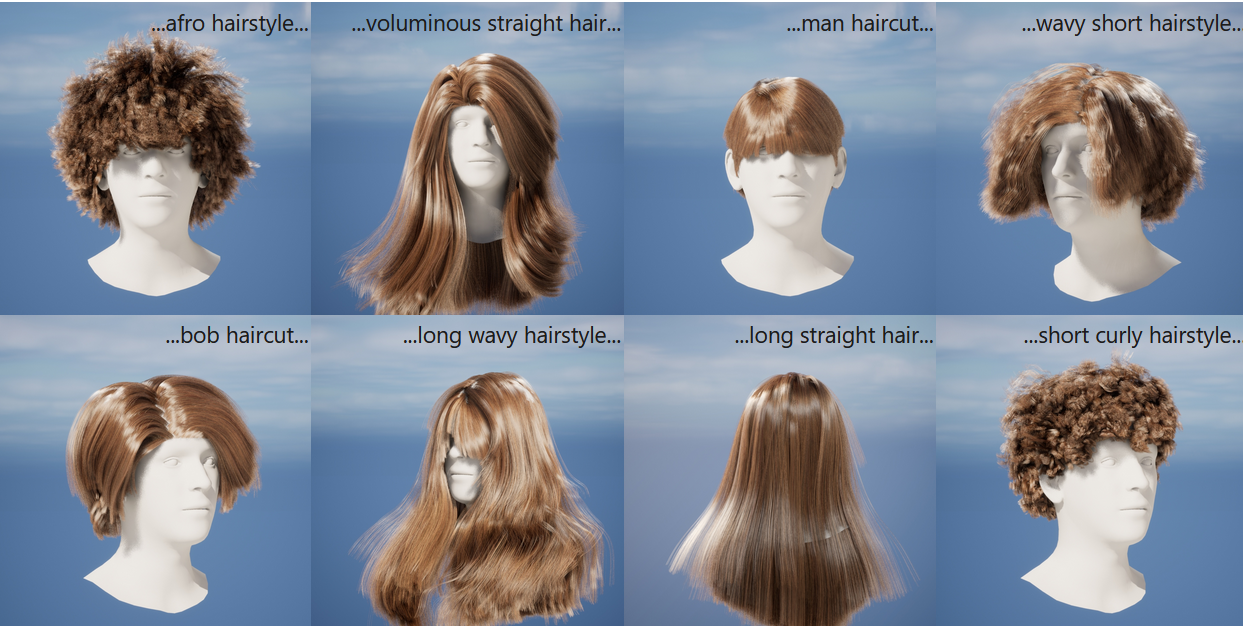

Text-Conditioned Generative Model of 3D Strand-based Human Hairstyles

Members

Publications