Capturing and Animation of Body and Clothing from Monocular Video


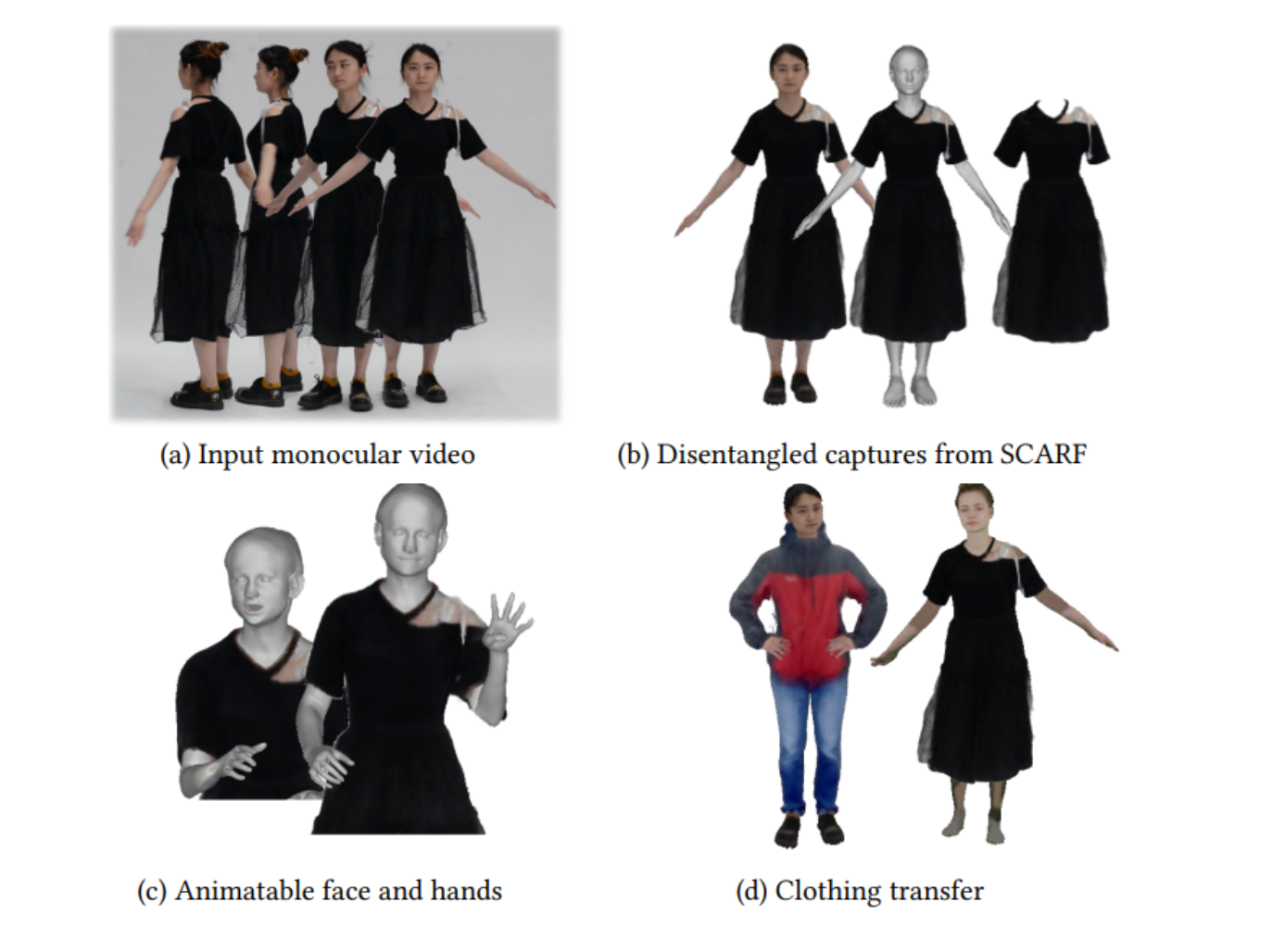

We propose SCARF (Segmented Clothed Avatar Radiance Field), a hybrid model combining a mesh-based body with a neural radiance field. Integrating the mesh into the volumetric rendering in combination with a differentiable rasterizer enables us to optimize SCARF directly from monocular videos, without any 3D supervision. The hybrid modeling enables SCARF to (i) animate the clothed body avatar by changing body poses (including hand articulation and facial expressions), (ii) synthesize novel views of the avatar, and (iii) transfer clothing between avatars in virtual try-on applications. We demonstrate that SCARF reconstructs clothing with higher visual quality than existing methods, that the clothing deforms with changing body pose and body shape, and that clothing can be successfully transferred between avatars of different subjects.
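To make the idea of integrating a mesh into volumetric rendering concrete, the following is a minimal sketch of one common way to do it, not the authors' implementation. It assumes that radiance-field samples are alpha-composited front to back along a ray, and that if the ray hits the body mesh, the mesh surface acts as a fully opaque final sample that absorbs whatever transmittance remains. The function name `composite_ray` and all of its parameters are hypothetical and chosen for illustration only.

```python
import math


def composite_ray(densities, colors, deltas, mesh_color=None):
    """Alpha-composite radiance-field samples along one ray, front to back.

    densities  -- non-negative volume densities of the samples in front of the mesh
    colors     -- one RGB tuple per sample
    deltas     -- distance covered by each sample along the ray
    mesh_color -- RGB of the mesh surface hit by the ray, or None if the ray
                  misses the mesh (assumption: the mesh is treated as opaque)
    Returns (rgb, opacity) for the ray.
    """
    transmittance = 1.0  # fraction of light that still reaches the camera
    rgb = [0.0, 0.0, 0.0]

    for sigma, color, delta in zip(densities, colors, deltas):
        # Standard volume-rendering opacity of a single sample.
        alpha = 1.0 - math.exp(-sigma * delta)
        weight = transmittance * alpha
        rgb = [acc + weight * c for acc, c in zip(rgb, color)]
        transmittance *= 1.0 - alpha

    if mesh_color is not None:
        # The opaque mesh surface receives all remaining transmittance,
        # so nothing behind the body contributes to the pixel.
        rgb = [acc + transmittance * c for acc, c in zip(rgb, mesh_color)]
        transmittance = 0.0

    return rgb, 1.0 - transmittance
```

Because every step is a sum or product of differentiable terms, a formulation of this kind lets image-space losses propagate to both the radiance-field samples and the mesh color, which is consistent with optimizing directly from video without 3D supervision.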

| Field | Value |
| --- | --- |
| Author(s): | Feng, Yao and Yang, Jinlong and Pollefeys, Marc and Black, Michael J. and Bolkart, Timo |
| Book Title: | Proceedings SIGGRAPH Asia 2022 Conference Papers Proceedings (SA ’22 2022) |
| Year: | 2022 |
| Month: | December |
| Bibtex Type: | Conference Paper (inproceedings) |
| DOI: | 10.1145/3550469.3555423 |
| Event Name: | SIGGRAPH Asia 2022 Conference Papers Proceedings (SA’22) |
| Event Place: | Daegu, Republic of Korea |
| State: | Published |
| URL: | https://yfeng95.github.io/scarf/ |
| Article Number: | 45 |
| Electronic Archiving: | grant_archive |

BibTeX

@inproceedings{Feng2022scarf,
  title = {Capturing and Animation of Body and Clothing from Monocular Video},
  booktitle = {Proceedings SIGGRAPH Asia 2022 Conference Papers Proceedings (SA '22 2022)},
  abstract = {We propose SCARF (Segmented Clothed Avatar Radiance Field), a hybrid model combining a mesh-based body with a neural radiance field. Integrating the mesh into the volumetric rendering in combination with a differentiable rasterizer enables us to optimize SCARF directly from monocular videos, without any 3D supervision. The hybrid modeling enables SCARF to (i) animate the clothed body avatar by changing body poses (including hand articulation and facial expressions), (ii) synthesize novel views of the avatar, and (iii) transfer clothing between avatars in virtual try-on applications. We demonstrate that SCARF reconstructs clothing with higher visual quality than existing methods, that the clothing deforms with changing body pose and body shape, and that clothing can be successfully transferred between avatars of different subjects.},
  month = dec,
  year = {2022},
  slug = {scarf-ac995f96-7046-4145-9d80-6cf84bc1d53c},
  author = {Feng, Yao and Yang, Jinlong and Pollefeys, Marc and Black, Michael J. and Bolkart, Timo},
  url = {https://yfeng95.github.io/scarf/},
  month_numeric = {12}
}