Enhancing HRI through Responsive Multimodal Feedback

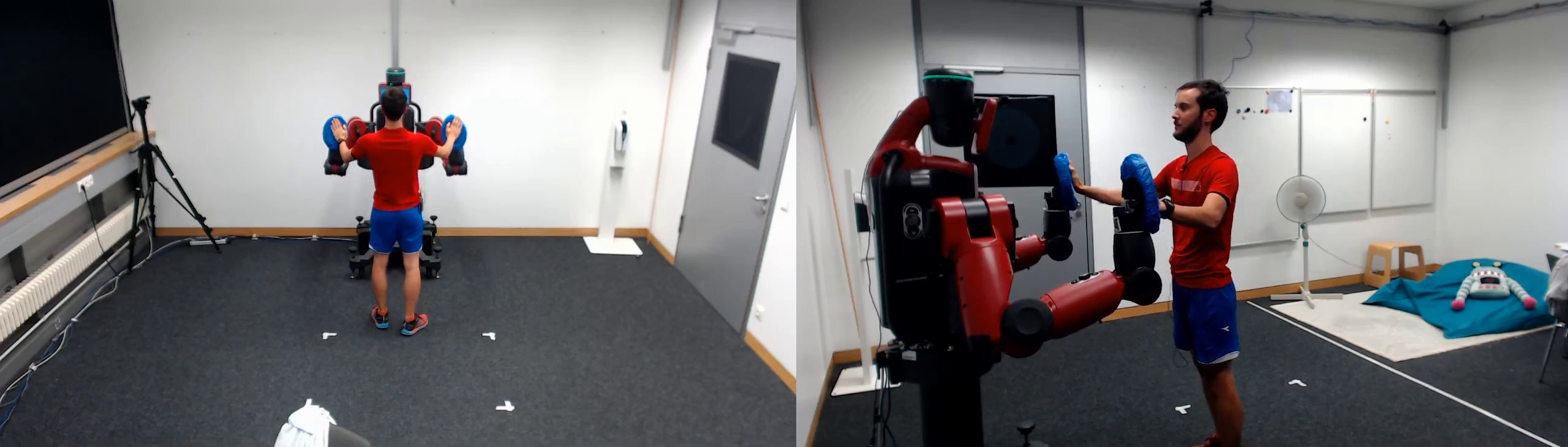

We investigate how multi-modal feedback (gestures, facial expressions, and sounds) can promote physical activity and enhance task comprehension, and we explore how social-physical robots can create engaging, movement-based interactions.

To study such interactions, we developed eight exercise games for the Rethink Robotics Baxter Research Robot that examine how people respond to social-physical interactions with a humanoid robot. These games were developed with the input and guidance of experts in game design, therapy, and rehabilitation, and validated via extensive pilot testing. Results from our game evaluation study with 20 younger and 20 older adults show that bimanual humanoid robots could motivate users to stay healthy via social-physical exercise. However, we found that these robots must have customizable behaviors and end-user monitoring capabilities to be viable in real-world scenarios.

To address these needs, we developed the Robot Interaction Studio, a platform for enabling minimally supervised human-robot interaction. This system combines Captury Live, a real-time markerless motion-capture system, with a ROS-compatible robot to estimate user actions in real time and provide corrective feedback. We evaluated this platform via a user study in which Baxter sequentially presented the user with three gesture-based cues in randomized order. The study results showed that participants explored the interaction workspace, mimicked Baxter, and interacted with Baxter's hands even without instructions.

Given the importance of gestures in motor learning, we examined how nonverbal robot feedback influences human behavior. Inspired by educational research, we tested formative feedback (real-time corrections) and summative feedback (post-task scores) across three tasks: positioning, mimicking arm poses, and contacting the robot's hands. We found that both formative and summative feedback improved user task performance. However, only formative feedback enhanced task comprehension.
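To make the distinction between the two feedback types concrete, the following is a minimal illustrative sketch and not the implementation used in the study. It assumes a hypothetical setup in which a motion-capture system supplies the user's hand position as an (x, y, z) point in metres, with +x to the user's right, +y forward, and +z up. The function names, the 5 cm tolerance, and the cue words are all assumptions made for illustration.

```python
from math import dist
from typing import List, Tuple

Point = Tuple[float, float, float]

# Hypothetical tolerance (metres) within which the hand counts as "on target".
TOLERANCE_M = 0.05

# Assumed axis convention: +x right, +y forward, +z up.
# Each pair is (cue for negative error, cue for positive error).
CUES = [("left", "right"), ("back", "forward"), ("down", "up")]


def formative_feedback(hand: Point, target: Point, tol: float = TOLERANCE_M) -> str:
    """Real-time correction: meant to be called on every tracked frame."""
    if dist(hand, target) <= tol:
        return "hold"
    # Signed error per axis: how far the hand must move to reach the target.
    errors = [t - h for h, t in zip(hand, target)]
    # Cue the axis with the largest absolute error.
    axis = max(range(3), key=lambda i: abs(errors[i]))
    return CUES[axis][1 if errors[axis] > 0 else 0]


def summative_feedback(frames: List[Point], target: Point, tol: float = TOLERANCE_M) -> float:
    """Post-task score: fraction of frames on target, reported once at the end."""
    if not frames:
        return 0.0
    on_target = sum(dist(f, target) <= tol for f in frames)
    return on_target / len(frames)
```

In this sketch, formative feedback tells the user what to change while the task is still under way, whereas summative feedback only reports how well the attempt went after it ends, which mirrors the difference between real-time corrections and post-task scores described above.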

These findings highlight how real-time, multi-modal interactions enable robots to encourage physical activity and improve learning, making them valuable tools for exercise and rehabilitation.

This research project involves collaborations with Naomi T. Fitter (Oregon State University) and Michelle J. Johnson (University of Pennsylvania).
