Probabilistic Models for 3D Urban Scene Understanding from Movable Platforms

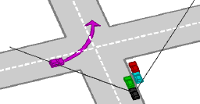

Visual 3D scene understanding is an important component in autonomous driving and robot navigation. Intelligent vehicles, for example, often base their decisions on observations obtained from video cameras, as they are cheap and easy to employ. Inner-city intersections represent an interesting but also very challenging scenario in this context: the road layout may be very complex, and observations are often noisy or even missing due to heavy occlusions. While highway navigation and autonomous driving on simple and annotated intersections have already been demonstrated successfully, understanding and navigating general inner-city crossings with little prior knowledge remains an unsolved problem. This thesis is a contribution to understanding multi-object traffic scenes from video sequences. All data is provided by a camera system mounted on top of the autonomous driving platform AnnieWAY. The proposed probabilistic generative model reasons jointly about the 3D scene layout as well as the 3D location and orientation of objects in the scene. In particular, the scene topology, the scene geometry, and the traffic activities are inferred from short video sequences. The model takes advantage of monocular information in the form of vehicle tracklets, vanishing lines, and semantic labels. Additionally, the benefit of stereo features such as 3D scene flow and occupancy grids is investigated. Motivated by the impressive driving capabilities of humans, no further information such as GPS, lidar, radar, or map knowledge is required. Experiments conducted on 113 representative intersection sequences show that the developed approach successfully infers the correct layout in a variety of difficult scenarios. To evaluate the importance of each feature cue, experiments with different feature combinations are conducted. Additionally, the proposed method is shown to improve object detection and object orientation estimation performance.

| Field | Value |
| --- | --- |
| Author(s): | Andreas Geiger |
| Year: | 2013 |
| Month: | April |
| Bibtex Type: | Ph.D. Thesis (phdthesis) |
| Electronic Archiving: | grant_archive |
| Institution: | Karlsruhe Institute of Technology |
| School: | Karlsruhe Institute of Technology |


BibTeX

@phdthesis{Geiger2013,
  title = {Probabilistic Models for 3D Urban Scene Understanding from Movable Platforms},
  abstract = {Visual 3D scene understanding is an important component in autonomous
    driving and robot navigation. Intelligent vehicles, for example, often
    base their decisions on observations obtained from video cameras,
    as they are cheap and easy to employ. Inner-city intersections represent
    an interesting but also very challenging scenario in this context:
    the road layout may be very complex, and observations are often noisy
    or even missing due to heavy occlusions. While highway navigation
    and autonomous driving on simple and annotated intersections have
    already been demonstrated successfully, understanding and navigating
    general inner-city crossings with little prior knowledge remains
    an unsolved problem. This thesis is a contribution to understanding
    multi-object traffic scenes from video sequences. All data is provided
    by a camera system mounted on top of the autonomous driving
    platform AnnieWAY. The proposed probabilistic generative model reasons
    jointly about the 3D scene layout as well as the 3D location and
    orientation of objects in the scene. In particular, the scene topology,
    the scene geometry, and the traffic activities are inferred from short video
    sequences. The model takes advantage of monocular information in
    the form of vehicle tracklets, vanishing lines, and semantic labels.
    Additionally, the benefit of stereo features such as 3D scene flow
    and occupancy grids is investigated. Motivated by the impressive
    driving capabilities of humans, no further information such as GPS,
    lidar, radar, or map knowledge is required. Experiments conducted
    on 113 representative intersection sequences show that the developed
    approach successfully infers the correct layout in a variety of difficult
    scenarios. To evaluate the importance of each feature cue, experiments
    with different feature combinations are conducted. Additionally,
    the proposed method is shown to improve object detection and object
    orientation estimation performance.},
  institution = {Karlsruhe Institute of Technology},
  school = {Karlsruhe Institute of Technology},
  month = apr,
  year = {2013},
  slug = {geiger2013},
  author = {Geiger, Andreas},
  month_numeric = {4}
}