Learning to Estimate Palpation Forces in Robotic Surgery From Visual-Inertial Data

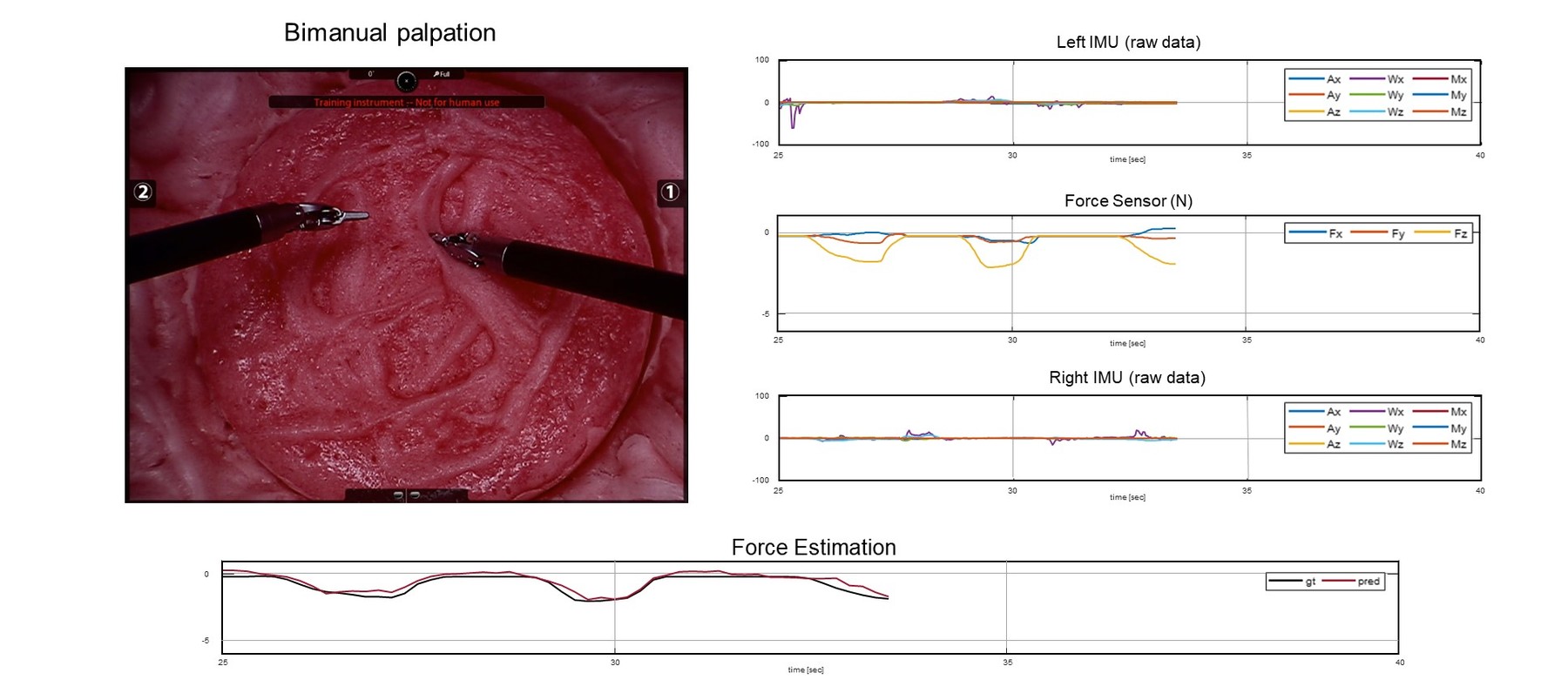

Surgeons cannot directly touch the patient's tissue in robot-assisted minimally invasive procedures. Instead, they must palpate using instruments inserted into the body through trocars. This way of operating largely prevents surgeons from using haptic cues to localize visually undetectable structures such as tumors and blood vessels, motivating research on direct and indirect force sensing. We propose an indirect force-sensing method that combines monocular images of the operating field with measurements from IMUs attached externally to the instrument shafts. Our method is thus suitable for various robotic surgery systems as well as laparoscopic surgery. We collected a new dataset using a da Vinci Si robot, a force sensor, and four different phantom tissue samples. The dataset includes 230 one-minute-long recordings of repeated bimanual palpation tasks performed by four lay operators. We evaluated several network architectures and investigated the role of the network inputs. Using the DenseNet vision model and including inertial data best predicted palpation forces (lowest average root-mean-square error and highest average coefficient of determination). Ablation studies revealed that video frames carry significantly more information than inertial signals. Finally, we demonstrated the model's ability to generalize to unseen tissue and predict shear contact forces.
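The abstract ranks models by root-mean-square error (RMSE) and coefficient of determination (R²). For readers unfamiliar with these metrics, the following is a minimal sketch of their standard definitions. The function names and the force values are hypothetical and chosen only for illustration; they are not taken from the paper's code or dataset.

```python
import math


def rmse(measured, estimated):
    """Root-mean-square error between measured and estimated forces."""
    squared_errors = [(m - e) ** 2 for m, e in zip(measured, estimated)]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


def r_squared(measured, estimated):
    """Coefficient of determination: 1 - (residual SS / total SS)."""
    mean_measured = sum(measured) / len(measured)
    ss_res = sum((m - e) ** 2 for m, e in zip(measured, estimated))
    ss_tot = sum((m - mean_measured) ** 2 for m in measured)
    return 1.0 - ss_res / ss_tot


# Made-up force samples in newtons (illustrative only, not data from the paper).
measured_force = [0.0, 1.0, 2.0, 3.0]
estimated_force = [0.0, 1.0, 2.0, 2.0]

print(f"RMSE: {rmse(measured_force, estimated_force):.3f} N")
print(f"R^2:  {r_squared(measured_force, estimated_force):.3f}")
```

A lower RMSE means the estimates lie closer to the force-sensor readings on average, while an R² closer to 1 means the estimates explain more of the variance in the measured force.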

| Field | Value |
| --- | --- |
| Author(s): | Lee, Young-Eun and Mat Husin, Haliza and Forte, Maria-Paola and Lee, Seong-Whan and Kuchenbecker, Katherine J. |
| Journal: | IEEE Transactions on Medical Robotics and Bionics |
| Volume: | 5 |
| Number (issue): | 3 |
| Pages: | 496--506 |
| Year: | 2023 |
| Month: | August |
| Project(s): | |
| Bibtex Type: | Article (article) |
| DOI: | 10.1109/TMRB.2023.3295008 |
| State: | Published |
| Electronic Archiving: | grant_archive |

BibTeX

@article{Lee23-TMRB-Palpation,

title = {Learning to Estimate Palpation Forces in Robotic Surgery From Visual-Inertial Data},

journal = {IEEE Transactions on Medical Robotics and Bionics},

abstract = {Surgeons cannot directly touch the patient's tissue in robot-assisted minimally invasive procedures. Instead, they must palpate using instruments inserted into the body through trocars. This way of operating largely prevents surgeons from using haptic cues to localize visually undetectable structures such as tumors and blood vessels, motivating research on direct and indirect force sensing. We propose an indirect force-sensing method that combines monocular images of the operating field with measurements from IMUs attached externally to the instrument shafts. Our method is thus suitable for various robotic surgery systems as well as laparoscopic surgery. We collected a new dataset using a da Vinci Si robot, a force sensor, and four different phantom tissue samples. The dataset includes 230 one-minute-long recordings of repeated bimanual palpation tasks performed by four lay operators. We evaluated several network architectures and investigated the role of the network inputs. Using the DenseNet vision model and including inertial data best predicted palpation forces (lowest average root-mean-square error and highest average coefficient of determination). Ablation studies revealed that video frames carry significantly more information than inertial signals. Finally, we demonstrated the model's ability to generalize to unseen tissue and predict shear contact forces.},

volume = {5},

number = {3},

pages = {496--506},

month = aug,

year = {2023},

slug = {lee23-tmrb-palpation},

author = {Lee, Young-Eun and Mat Husin, Haliza and Forte, Maria-Paola and Lee, Seong-Whan and Kuchenbecker, Katherine J.},

month_numeric = {8}

}