2024

hi

Mohan, M., Kuchenbecker, K. J.

Demonstration: OCRA - A Kinematic Retargeting Algorithm for Expressive Whole-Arm Teleoperation

Hands-on demonstration presented at the Conference on Robot Learning (CoRL), Munich, Germany, November 2024 (misc) Accepted

al

hi

ei

Andrussow, I., Sun, H., Martius, G., Kuchenbecker, K. J.

Demonstration: Minsight - A Soft Vision-Based Tactile Sensor for Robotic Fingertips

Hands-on demonstration presented at the Conference on Robot Learning (CoRL), Munich, Germany, November 2024 (misc) Accepted

hi

Bartels, J. U., Sanchez-Tamayo, N., Sedlmair, M., Kuchenbecker, K. J.

Active Haptic Feedback for a Virtual Wrist-Anchored User Interface

Hands-on demonstration presented at the ACM Symposium on User Interface Software and Technology (UIST), Pittsburgh, USA, October 2024 (misc) Accepted

ps

Sanyal, S.

Leveraging Unpaired Data for the Creation of Controllable Digital Humans

Max Planck Institute for Intelligent Systems and Eberhard Karls Universität Tübingen, September 2024 (phdthesis) To be published

ps

Osman, A. A. A.

Realistic Digital Human Characters: Challenges, Models and Algorithms

University of Tübingen, September 2024 (phdthesis)

ei

Immer, A.

Advances in Probabilistic Methods for Deep Learning

ETH Zurich, Switzerland, September 2024, CLS PhD Program (phdthesis)

hi

Rokhmanova, N., Martus, J., Faulkner, R., Fiene, J., Kuchenbecker, K. J.

Modeling Shank Tissue Properties and Quantifying Body Composition with a Wearable Actuator-Accelerometer Set

Extended abstract (1 page) presented at the American Society of Biomechanics Annual Meeting (ASB), Madison, USA, August 2024 (misc)

hi

Schulz, A., Serhat, G., Kuchenbecker, K. J.

Adapting a High-Fidelity Simulation of Human Skin for Comparative Touch Sensing

Extended abstract (1 page) presented at the American Society of Biomechanics Annual Meeting (ASB), Madison, USA, August 2024 (misc)

hi

Gong, Y.

Engineering and Evaluating Naturalistic Vibrotactile Feedback for Telerobotic Assembly

University of Stuttgart, Stuttgart, Germany, August 2024, Faculty of Design, Production Engineering and Automotive Engineering (phdthesis)

ei

Park, J.

A Measure-Theoretic Axiomatisation of Causality and Kernel Regression

University of Tübingen, Germany, July 2024 (phdthesis)

ei

Sajjadi, S. M. M.

Enhancement and Evaluation of Deep Generative Networks with Applications in Super-Resolution and Image Generation

University of Tübingen, Germany, July 2024 (phdthesis)

ps

Taheri, O.

Modelling Dynamic 3D Human-Object Interactions: From Capture to Synthesis

University of Tübingen, July 2024 (phdthesis) To be published

hi

Lev, H. K., Sharon, Y., Geftler, A., Nisky, I.

Errors in Long-Term Robotic Surgical Training

Work-in-progress paper (3 pages) presented at the EuroHaptics Conference, Lille, France, June 2024 (misc)

ei

Stimper, V.

Advancing Normalising Flows to Model Boltzmann Distributions

University of Cambridge, Cambridge, UK, June 2024 (Cambridge-Tübingen-Fellowship-Program) (phdthesis)

hi

zwe-rob

Rokhmanova, N., Martus, J., Faulkner, R., Fiene, J., Kuchenbecker, K. J.

GaitGuide: A Wearable Device for Vibrotactile Motion Guidance

Workshop paper (3 pages) presented at the ICRA Workshop on Advancing Wearable Devices and Applications Through Novel Design, Sensing, Actuation, and AI, Yokohama, Japan, May 2024 (misc)

hi

Sundaram, V. H., Smith, L., Turin, Z., Rentschler, M. E., Welker, C. G.

Three-Dimensional Surface Reconstruction of a Soft System via Distributed Magnetic Sensing

Workshop paper (3 pages) presented at the ICRA Workshop on Advancing Wearable Devices and Applications Through Novel Design, Sensing, Actuation, and AI, Yokohama, Japan, May 2024 (misc)

hi

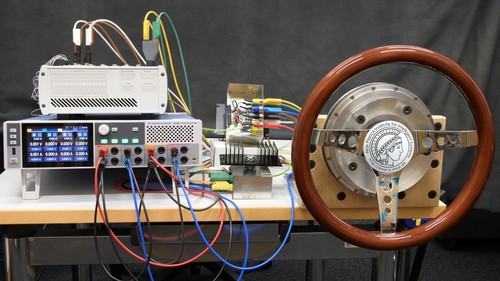

Javot, B., Nguyen, V. H., Ballardini, G., Kuchenbecker, K. J.

CAPT Motor: A Strong Direct-Drive Rotary Haptic Interface

Hands-on demonstration presented at the IEEE Haptics Symposium, Long Beach, USA, April 2024 (misc)

hi

Fazlollahi, F., Seifi, H., Ballardini, G., Taghizadeh, Z., Schulz, A., MacLean, K. E., Kuchenbecker, K. J.

Quantifying Haptic Quality: External Measurements Match Expert Assessments of Stiffness Rendering Across Devices

Work-in-progress paper (2 pages) presented at the IEEE Haptics Symposium, Long Beach, USA, April 2024 (misc)

hi

rm

Sanchez-Tamayo, N., Yoder, Z., Ballardini, G., Rothemund, P., Keplinger, C., Kuchenbecker, K. J.

Cutaneous Electrohydraulic (CUTE) Wearable Devices for Multimodal Haptic Feedback

Extended abstract (1 page) presented at the IEEE RoboSoft Workshop on Multimodal Soft Robots for Multifunctional Manipulation, Locomotion, and Human-Machine Interaction, San Diego, USA, April 2024 (misc)

hi

rm

Sanchez-Tamayo, N., Yoder, Z., Ballardini, G., Rothemund, P., Keplinger, C., Kuchenbecker, K. J.

Cutaneous Electrohydraulic Wearable Devices for Expressive and Salient Haptic Feedback

Hands-on demonstration presented at the IEEE Haptics Symposium, Long Beach, USA, April 2024 (misc)

hi

rm

Sanchez-Tamayo, N., Yoder, Z., Ballardini, G., Rothemund, P., Keplinger, C., Kuchenbecker, K. J.

Demonstration: Cutaneous Electrohydraulic (CUTE) Wearable Devices for Expressive and Salient Haptic Feedback

Hands-on demonstration presented at the IEEE RoboSoft Conference, San Diego, USA, April 2024 (misc)

ei

Rahaman, N., Weiss, M., Wüthrich, M., Bengio, Y., Li, E., Pal, C., Schölkopf, B.

Language Models Can Reduce Asymmetry in Information Markets

arXiv:2403.14443, March 2024, Published as: Redesigning Information Markets in the Era of Language Models, Conference on Language Modeling (COLM) (techreport)

ei

von Kügelgen, J.

Identifiable Causal Representation Learning

University of Cambridge, Cambridge, UK, February 2024 (Cambridge-Tübingen-Fellowship) (phdthesis)

hi

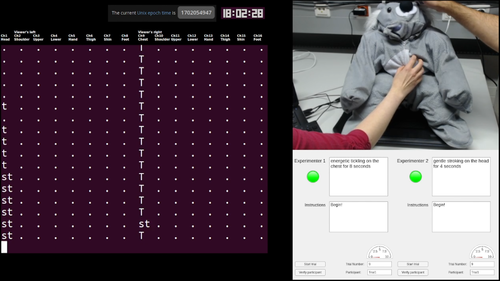

Burns, R.

Creating a Haptic Empathetic Robot Animal That Feels Touch and Emotion

University of Tübingen, Tübingen, Germany, February 2024, Department of Computer Science (phdthesis)

hi

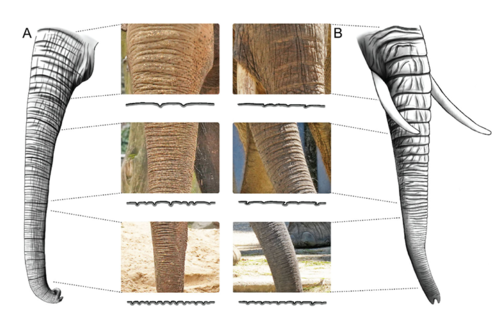

Kaufmann, L., Schulz, A., Reveyaz, N., Ritter, C., Hildebrandt, T., Brecht, M.

Elephants develop wrinkles through both form and function

Society for Integrative and Comparative Biology, Seattle, USA, January 2024 (misc) Accepted

hi

Schulz, A., Serhat, G., Kuchenbecker, K. J.

Adapting a High-Fidelity Simulation of Human Skin for Comparative Touch Sensing in the Elephant Trunk

Abstract presented at the Society for Integrative and Comparative Biology Annual Meeting (SICB), Seattle, USA, January 2024 (misc)

hi

Singal, K., Schulz, A., Dimitriyev, M., Matsumoto, E.

Simplifying the Wrinkled Complexity of Elephant Trunks using Knitted Biomimicry

Society for Integrative and Comparative Biology, Seattle, USA, January 2024 (misc) Accepted

hi

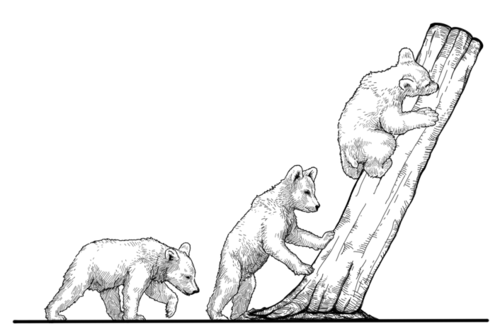

Shriver, C., Schulz, A., Scott, D., Elgart, J., Mendelson, J., Hu, D., Chang, Y.

Defining Mammalian Climbing Gaits and their influence criteria including morphology and mechanics

Society for Integrative and Comparative Biology, Seattle, USA, January 2024 (misc) Accepted

hi

Sordilla, S., Schulz, A., Hu, D., Higgins, C.

Collagen entanglement in elephant skin gives way to strain-stiffening mechanisms

Society for Integrative and Comparative Biology, Seattle, USA, January 2024 (misc) Accepted

hi

Khojasteh, B., Shao, Y., Kuchenbecker, K. J.

MPI-10: Haptic-Auditory Measurements from Tool-Surface Interactions

Dataset published as a companion to the journal article "Robust Surface Recognition with the Maximum Mean Discrepancy: Degrading Haptic-Auditory Signals through Bandwidth and Noise" in IEEE Transactions on Haptics, January 2024 (misc)

zwe-csfm

hi

Schulz, A., Kaufmann, L., Brecht, M., Richter, G., Kuchenbecker, K. J.

Whiskers That Don’t Whisk: Unique Structure From the Absence of Actuation in Elephant Whiskers

Abstract presented at the Society for Integrative and Comparative Biology Annual Meeting (SICB), Seattle, USA, January 2024 (misc)

ei

Bonse, M. J., Gebhard, T. D., Dannert, F. A., Absil, O., Cantalloube, F., Christiaens, V., Cugno, G., Garvin, E. O., Hayoz, J., Kasper, M., Matthews, E., Schölkopf, B., Quanz, S. P.

Use the 4S (Signal-Safe Speckle Subtraction): Explainable Machine Learning reveals the Giant Exoplanet AF Lep b in High-Contrast Imaging Data from 2011

2024 (misc) Submitted

ev

Achterhold, J., Guttikonda, S., Kreber, J. U., Li, H., Stueckler, J.

Learning a Terrain- and Robot-Aware Dynamics Model for Autonomous Mobile Robot Navigation

CoRR abs/2409.11452, 2024, Preprint submitted to Robotics and Autonomous Systems Journal. https://arxiv.org/abs/2409.11452 (techreport) Submitted

lds

Eberhard, O., Vernade, C., Muehlebach, M.

A Pontryagin Perspective on Reinforcement Learning

Max Planck Institute for Intelligent Systems, 2024 (techreport)

ps

Müller, L.

Self- and Interpersonal Contact in 3D Human Mesh Reconstruction

University of Tübingen, Tübingen, 2024 (phdthesis)

lds

Er, D., Trimpe, S., Muehlebach, M.

Distributed Event-Based Learning via ADMM

Max Planck Institute for Intelligent Systems, 2024 (techreport)

ps

Petrovich, M.

Natural Language Control for 3D Human Motion Synthesis

LIGM, Ecole des Ponts, Univ Gustave Eiffel, CNRS, 2024 (phdthesis)

ev

Baumeister, F., Mack, L., Stueckler, J.

Incremental Few-Shot Adaptation for Non-Prehensile Object Manipulation using Parallelizable Physics Simulators

CoRR abs/2409.13228, 2024, Submitted to IEEE International Conference on Robotics and Automation (ICRA) 2025 (techreport) Submitted

hi

Landin, N., Romano, J. M., McMahan, W., Kuchenbecker, K. J.

Discrete Fourier Transform Three-to-One (DFT321): Code

MATLAB code for the Discrete Fourier Transform Three-to-One (DFT321) algorithm, 2024 (misc)

ei

Rajendran, G., Buchholz, S., Aragam, B., Schölkopf, B., Ravikumar, P.

Learning Interpretable Concepts: Unifying Causal Representation Learning and Foundation Models

2024 (misc)

2023

sf

Barocas, S., Hardt, M., Narayanan, A.

Fairness in Machine Learning: Limitations and Opportunities

MIT Press, December 2023 (book)

hi

Mohan, M.

Gesture-Based Nonverbal Interaction for Exercise Robots

University of Tübingen, Tübingen, Germany, October 2023, Department of Computer Science (phdthesis)

hi

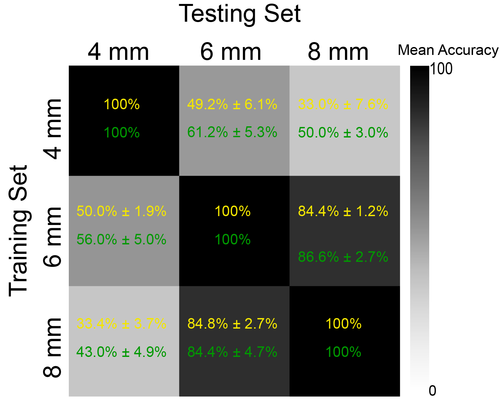

Khojasteh, B., Shao, Y., Kuchenbecker, K. J.

Seeking Causal, Invariant, Structures with Kernel Mean Embeddings in Haptic-Auditory Data from Tool-Surface Interaction

Workshop paper (4 pages) presented at the IROS Workshop on Causality for Robotics: Answering the Question of Why, Detroit, USA, October 2023 (misc)

hi

Garrofé, G., Schoeffmann, C., Zangl, H., Kuchenbecker, K. J., Lee, H.

NearContact: Accurate Human Detection using Tomographic Proximity and Contact Sensing with Cross-Modal Attention

Extended abstract (4 pages) presented at the International Workshop on Human-Friendly Robotics (HFR), Munich, Germany, September 2023 (misc)

hi

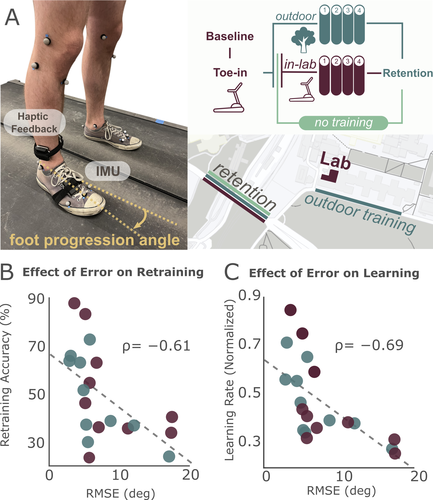

Rokhmanova, N., Pearl, O., Kuchenbecker, K. J., Halilaj, E.

The Role of Kinematics Estimation Accuracy in Learning with Wearable Haptics

Abstract (1 page) presented at the American Society of Biomechanics Annual Meeting (ASB), Knoxville, USA, August 2023 (misc)

hi

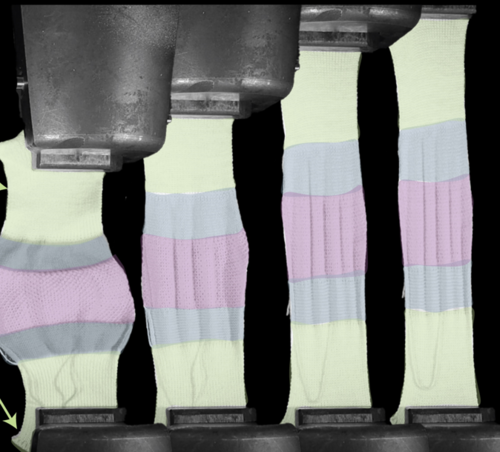

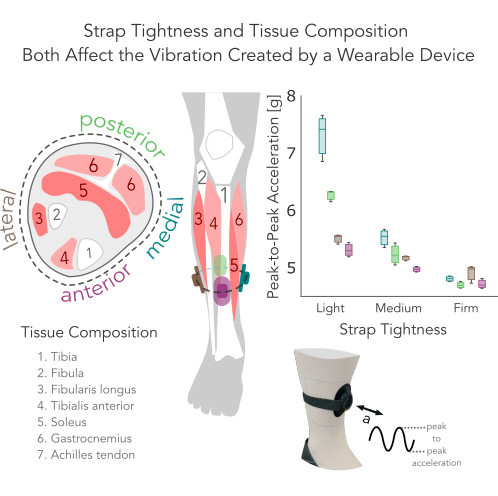

Rokhmanova, N., Faulkner, R., Martus, J., Fiene, J., Kuchenbecker, K. J.

Strap Tightness and Tissue Composition Both Affect the Vibration Created by a Wearable Device

Work-in-progress paper (1 page) presented at the IEEE World Haptics Conference (WHC), Delft, The Netherlands, July 2023 (misc)

hi

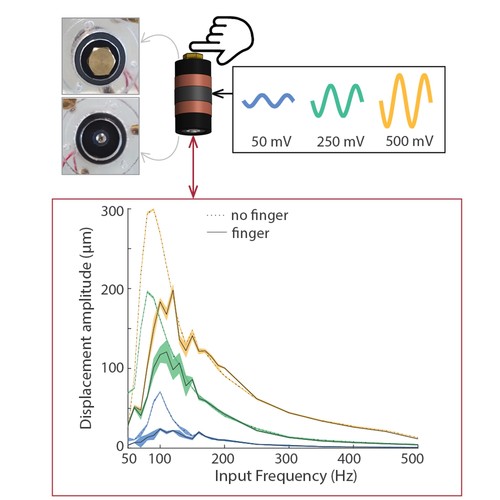

Ballardini, G., Kuchenbecker, K. J.

Toward a Device for Reliable Evaluation of Vibrotactile Perception

Work-in-progress paper (1 page) presented at the IEEE World Haptics Conference (WHC), Delft, The Netherlands, July 2023 (misc)

hi

Khojasteh, B., Solowjow, F., Trimpe, S., Kuchenbecker, K. J.

Multimodal Multi-User Surface Recognition with the Kernel Two-Sample Test: Code

Code published as a companion to the journal article "Multimodal Multi-User Surface Recognition with the Kernel Two-Sample Test" in IEEE Transactions on Automation Science and Engineering, July 2023 (misc)