2024

ps

Sanyal, S.

Leveraging Unpaired Data for the Creation of Controllable Digital Humans

Max Planck Institute for Intelligent Systems and Eberhard Karls Universität Tübingen, September 2024 (phdthesis) To be published

ps

Osman, A. A. A.

Realistic Digital Human Characters: Challenges, Models and Algorithms

University of Tübingen, September 2024 (phdthesis)

ei

Immer, A.

Advances in Probabilistic Methods for Deep Learning

ETH Zurich, Switzerland, September 2024, CLS PhD Program (phdthesis)

hi

Gong, Y.

Engineering and Evaluating Naturalistic Vibrotactile Feedback for Telerobotic Assembly

University of Stuttgart, Stuttgart, Germany, August 2024, Faculty of Design, Production Engineering and Automotive Engineering (phdthesis)

ei

Park, J.

A Measure-Theoretic Axiomatisation of Causality and Kernel Regression

University of Tübingen, Germany, July 2024 (phdthesis)

ei

Sajjadi, S. M. M.

Enhancement and Evaluation of Deep Generative Networks with Applications in Super-Resolution and Image Generation

University of Tübingen, Germany, July 2024 (phdthesis)

ps

Taheri, O.

Modelling Dynamic 3D Human-Object Interactions: From Capture to Synthesis

University of Tübingen, July 2024 (phdthesis) To be published

ei

Stimper, V.

Advancing Normalising Flows to Model Boltzmann Distributions

University of Cambridge, UK, Cambridge, June 2024, (Cambridge-Tübingen-Fellowship-Program) (phdthesis)

ei

von Kügelgen, J.

Identifiable Causal Representation Learning

University of Cambridge, UK, Cambridge, February 2024, (Cambridge-Tübingen-Fellowship) (phdthesis)

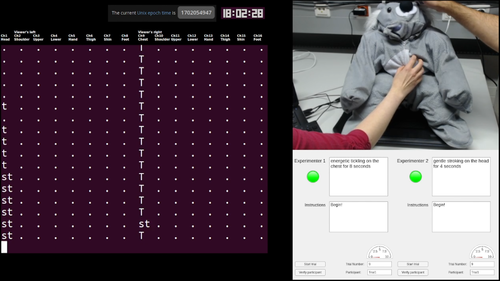

hi

Burns, R.

Creating a Haptic Empathetic Robot Animal That Feels Touch and Emotion

University of Tübingen, Tübingen, Germany, February 2024, Department of Computer Science (phdthesis)

ps

Müller, L.

Self- and Interpersonal Contact in 3D Human Mesh Reconstruction

University of Tübingen, Tübingen, 2024 (phdthesis)

ps

Petrovich, M.

Natural Language Control for 3D Human Motion Synthesis

LIGM, Ecole des Ponts, Univ Gustave Eiffel, CNRS, 2024 (phdthesis)

2023

sf

Barocas, S., Hardt, M., Narayanan, A.

Fairness in Machine Learning: Limitations and Opportunities

MIT Press, December 2023 (book)

hi

Mohan, M.

Gesture-Based Nonverbal Interaction for Exercise Robots

University of Tübingen, Tübingen, Germany, October 2023, Department of Computer Science (phdthesis)

ei

Karimi, A.

Advances in Algorithmic Recourse: Ensuring Causal Consistency, Fairness, & Robustness

ETH Zurich, Switzerland, July 2023 (phdthesis)

ei

Kübler, J. M.

Learning and Testing Powerful Hypotheses

University of Tübingen, Germany, July 2023 (phdthesis)

ei

Gresele, L.

Learning Identifiable Representations: Independent Influences and Multiple Views

University of Tübingen, Germany, June 2023 (phdthesis)

ei

Paulus, M.

Learning with and for discrete optimization

ETH Zurich, Switzerland, May 2023, CLS PhD Program (phdthesis)

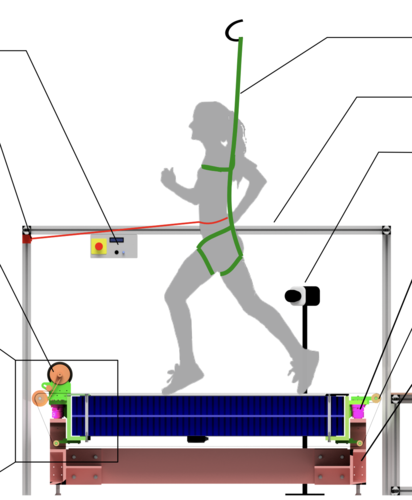

dlg

Sarvestani, A., Ruppert, F., Badri-Spröwitz, A.

An Open-Source Modular Treadmill for Dynamic Force Measurement with Load Dependant Range Adjustment

2023 (unpublished) Submitted

ei

Jin, Z., Mihalcea, R.

Natural Language Processing for Policymaking

In Handbook of Computational Social Science for Policy, pages: 141-162, 7, (Editors: Bertoni, E. and Fontana, M. and Gabrielli, L. and Signorelli, S. and Vespe, M.), Springer International Publishing, 2023 (inbook)

ev

Strecke, M. F.

Object-Level Dynamic Scene Reconstruction With Physical Plausibility From RGB-D Images

Eberhard Karls Universität Tübingen, Tübingen, 2023 (phdthesis)

mms

Baluktsian, M.

Wave front shaping with zone plates: Fabrication and characterization of lenses for soft x-ray applications from standard to singular optics

Universität Stuttgart, Stuttgart (und Verlag Dr. Hut, München), 2023 (phdthesis)

mms

Bubeck, C.

Tailored perovskite-type oxynitride semiconductors and oxides with advanced physical properties

Technische Universität Darmstadt, Darmstadt, 2023 (phdthesis)

mms

Schulz, Frank Martin Ernst

Static and dynamic investigation of magnonic systems: materials, applications and modeling

Universität Stuttgart, Stuttgart, 2023 (phdthesis)

2022

ei

Biester, L., Demszky, D., Jin, Z., Sachan, M., Tetreault, J., Wilson, S., Xiao, L., Zhao, J.

Proceedings of the Second Workshop on NLP for Positive Impact (NLP4PI)

Association for Computational Linguistics, December 2022 (proceedings)

hi

Richardson, B.

Multi-Timescale Representation Learning of Human and Robot Haptic Interactions

University of Stuttgart, Stuttgart, Germany, December 2022, Faculty of Computer Science, Electrical Engineering and Information Technology (phdthesis)

ps

Choutas, V.

Reconstructing Expressive 3D Humans from RGB Images

ETH Zurich, Max Planck Institute for Intelligent Systems and ETH Zurich, December 2022 (phdthesis)

dlg

Sarvestani, L. A.

Mechanical Design, Development and Testing of Bioinspired Legged Robots for Dynamic Locomotion

Eberhard Karls Universität Tübingen, Tübingen , November 2022 (phdthesis)

ei

Neitz, A.

Towards learning mechanistic models at the right level of abstraction

University of Tübingen, Germany, November 2022 (phdthesis)

pf

Qiu, T., Jeong, M., Goyal, R., Kadiri, V., Sachs, J., Fischer, P.

Magnetic Micro-/Nanopropellers for Biomedicine

In Field-Driven Micro and Nanorobots for Biology and Medicine, pages: 389-410, 16, (Editors: Sun, Y. and Wang, X. and Yu, J.), Springer, Cham, 2022 (inbook)

re

Lieder, F., Prentice, M.

Life Improvement Science

In Encyclopedia of Quality of Life and Well-Being Research, Springer, November 2022 (inbook)

ei

Lu, C.

Learning Causal Representations for Generalization and Adaptation in Supervised, Imitation, and Reinforcement Learning

University of Cambridge, UK, Cambridge, October 2022, (Cambridge-Tübingen-Fellowship) (phdthesis)

hi

Nam, S.

Understanding the Influence of Moisture on Fingerpad-Surface Interactions

University of Tübingen, Tübingen, Germany, October 2022, Department of Computer Science (phdthesis)

ei

Wenk, P.

Learning Time-Continuous Dynamics Models with Gaussian-Process-Based Gradient Matching

ETH Zurich, Switzerland, October 2022, CLS PhD Program (phdthesis)

ei

Tabibian, B.

Methods for Minimizing the Spread of Misinformation on the Web

University of Tübingen, Germany, September 2022 (phdthesis)

sf

Hardt, M., Recht, B.

Patterns, Predictions, and Actions: Foundations of Machine Learning

Princeton University Press, August 2022 (book)

ei

Huang, B.

Learning and Using Causal Knowledge: A Further Step Towards a Higher-Level Intelligence

Carnegie Mellon University, Pittsburgh, USA, July 2022 (phdthesis)

ei

Huang, B.

Learning and Using Causal Knowledge: A Further Step Towards a Higher-Level Intelligence

Carnegie Mellon University, July 2022, external supervision (phdthesis)

ei

Schölkopf, B., Uhler, C., Zhang, K.

Proceedings of the First Conference on Causal Learning and Reasoning (CLeaR 2022)

177, Proceedings of Machine Learning Research, PMLR, April 2022 (proceedings)

ei

Ialongo, A.

Variational Inference in Dynamical Systems

University of Cambridge, UK, Cambridge, February 2022, (Cambridge-Tübingen-Fellowship) (phdthesis)

mms

Groß, F.

Entwicklung von Methoden und Bausteinen zur Realisierung Komplexer Magnonischer Systeme

Universität Stuttgart, Stuttgart (und Cuvillier Verlag, Göttingen), 2022 (phdthesis)

al

Sun, H.

Machine-Learning-Driven Haptic Sensor Design

University of Tuebingen, Library, 2022 (phdthesis)

ei

Peters, J., Bauer, S., Pfister, N.

Causal Models for Dynamical Systems

In Probabilistic and Causal Inference: The Works of Judea Pearl, pages: 671-690, 1, Association for Computing Machinery, 2022 (inbook)

mms

Dogan, G.

Deposition and characterization of multi-functional, complex thin films using atomic layer deposition for copper corrosion protection

Universität Stuttgart, Stuttgart, 2022 (phdthesis)

ei

plg

Karimi, A. H., von Kügelgen, J., Schölkopf, B., Valera, I.

Towards Causal Algorithmic Recourse

In xxAI - Beyond Explainable AI: International Workshop, Held in Conjunction with ICML 2020, July 18, 2020, Vienna, Austria, Revised and Extended Papers, pages: 139-166, (Editors: Holzinger, Andreas and Goebel, Randy and Fong, Ruth and Moon, Taesup and Müller, Klaus-Robert and Samek, Wojciech), Springer International Publishing, 2022 (inbook)

pi

Wang, T.

Advanced Miniature Soft Robotic Systems

ETH Zürich , Zürich, 2022 (phdthesis)

ei

Salewski, L., Koepke, A. S., Lensch, H. P. A., Akata, Z.

CLEVR-X: A Visual Reasoning Dataset for Natural Language Explanations

In xxAI - Beyond Explainable AI: International Workshop, Held in Conjunction with ICML 2020, July 18, 2020, Vienna, Austria, Revised and Extended Papers, pages: 69-88, (Editors: Holzinger, Andreas and Goebel, Randy and Fong, Ruth and Moon, Taesup and Müller, Klaus-Robert and Samek, Wojciech), Springer International Publishing, 2022 (inbook)

ei

Schölkopf, B.

Causality for Machine Learning

In Probabilistic and Causal Inference: The Works of Judea Pearl, pages: 765-804, 1, Association for Computing Machinery, New York, NY, USA, 2022 (inbook)

2021

ics

Doerr, A.

Models for Data-Efficient Reinforcement Learning on Real-World Applications

University of Stuttgart, Stuttgart, October 2021 (phdthesis)

ei

Mehrjou, A.

Dynamics of Learning and Learning of Dynamics

ETH Zürich, Zürich, October 2021 (phdthesis)