2025

ps

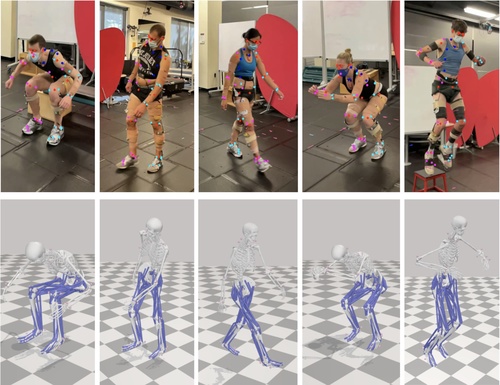

Gozlan, Y., Falisse, A., Uhlrich, S., Gatti, A., Black, M., Chaudhari, A.

OpenCapBench: A Benchmark to Bridge Pose Estimation and Biomechanics

In IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), February 2025 (inproceedings)

2024

Salaudeen, O., Hardt, M.

ImageNot: A Contrast with ImageNet Preserves Model Rankings

arXiv preprint arXiv:2404.02112, 2024 (conference) Submitted

Nastl, V. Y., Hardt, M.

Predictors from Causal Features Do Not Generalize Better to New Domains

arXiv preprint arXiv:2402.09891, 2024 (conference) Submitted

Mendler-Dünner, C., Carovano, G., Hardt, M.

An Engine Not a Camera: Measuring Performative Power of Online Search

arXiv preprint arXiv:2405.19073, 2024 (conference) Submitted

Athanasiou, N., Cseke, A., Diomataris, M., Wen, M. J. B., Varol, G.

MotionFix: Text-Driven 3D Human Motion Editing

In SIGGRAPH Asia 2024 Conference Proceedings, ACM, December 2024 (inproceedings) To be published

Dominguez-Olmedo, R., Hardt, M., Mendler-Dünner, C.

Questioning the Survey Responses of Large Language Models

arXiv preprint arXiv:2306.07951, 2024 (conference) Submitted

Dominguez-Olmedo, R., Dorner, F. E., Hardt, M.

Training on the Test Task Confounds Evaluation and Emergence

arXiv preprint arXiv:2407.07890, 2024 (conference) Submitted

Mohan, M., Kuchenbecker, K. J.

Demonstration: OCRA - A Kinematic Retargeting Algorithm for Expressive Whole-Arm Teleoperation

Hands-on demonstration presented at the Conference on Robot Learning (CoRL), Munich, Germany, November 2024 (misc) Accepted

Andrussow, I., Sun, H., Martius, G., Kuchenbecker, K. J.

Demonstration: Minsight - A Soft Vision-Based Tactile Sensor for Robotic Fingertips

Hands-on demonstration presented at the Conference on Robot Learning (CoRL), Munich, Germany, November 2024 (misc) Accepted

Bartels, J. U., Sanchez-Tamayo, N., Sedlmair, M., Kuchenbecker, K. J.

Active Haptic Feedback for a Virtual Wrist-Anchored User Interface

Hands-on demonstration presented at the ACM Symposium on User Interface Software and Technology (UIST), Pittsburgh, USA, October 2024 (misc) Accepted

Ostrek, M., Thies, J.

Stable Video Portraits

In European Conference on Computer Vision (ECCV 2024), LNCS, Springer Cham, October 2024 (inproceedings) Accepted

Dakri, A., Arora, V., Challier, L., Keller, M., Black, M. J., Pujades, S.

On predicting 3D bone locations inside the human body

In 27th International Conference on Medical Image Computing and Computer Assisted Intervention (MICCAI), October 2024 (inproceedings)

Ostrek, M., O’Sullivan, C., Black, M., Thies, J.

Synthesizing Environment-Specific People in Photographs

In European Conference on Computer Vision (ECCV 2024), LNCS, Springer Cham, October 2024 (inproceedings) Accepted

Tripathi, S., Taheri, O., Lassner, C., Black, M. J., Holden, D., Stoll, C.

HUMOS: Human Motion Model Conditioned on Body Shape

In European Conference on Computer Vision (ECCV 2024), LNCS, Springer Cham, October 2024 (inproceedings)

Zhang, H., Christen, S., Fan, Z., Hilliges, O., Song, J.

GraspXL: Generating Grasping Motions for Diverse Objects at Scale

In European Conference on Computer Vision (ECCV 2024), LNCS, Springer Cham, September 2024 (inproceedings) Accepted

Sanchez-Tamayo, N., Yoder, Z., Rothemund, P., Ballardini, G., Keplinger, C., Kuchenbecker, K. J.

Cutaneous Electrohydraulic (CUTE) Wearable Devices for Pleasant Broad-Bandwidth Haptic Cues

Advanced Science, (2402461):1-14, September 2024 (article)

rm

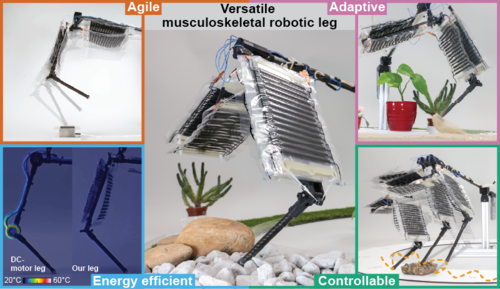

Buchner, T. J. K., Fukushima, T., Kazemipour, A., Gravert, S., Prairie, M., Romanescu, P., Arm, P., Zhang, Y., Wang, X., Zhang, S. L., Walter, J., Keplinger, C., Katzschmann, R. K.

Electrohydraulic Musculoskeletal Robotic Leg for Agile, Adaptive, yet Energy-Efficient Locomotion

Nature Communications, 15(1), September 2024 (article)

Schumacher, P., Krause, L., Schneider, J., Büchler, D., Martius, G., Haeufle, D.

Learning to Control Emulated Muscles in Real Robots: Towards Exploiting Bio-Inspired Actuator Morphology

In 10th International Conference on Biomedical Robotics and Biomechatronics (BioRob), September 2024 (inproceedings) Accepted

Fan, Z., Ohkawa, T., Yang, L., Lin, N., Zhou, Z., Zhou, S., Liang, J., Gao, Z., Zhang, X., Zhang, X., Li, F., Zheng, L., Lu, F., Zeid, K. A., Leibe, B., On, J., Baek, S., Prakash, A., Gupta, S., He, K., Sato, Y., Hilliges, O., Chang, H. J., Yao, A.

Benchmarks and Challenges in Pose Estimation for Egocentric Hand Interactions with Objects

In European Conference on Computer Vision (ECCV 2024), LNCS, Springer Cham, September 2024 (inproceedings) Accepted

Zuffi, S., Black, M. J.

AWOL: Analysis WithOut synthesis using Language

In European Conference on Computer Vision (ECCV 2024), LNCS, Springer Cham, September 2024 (inproceedings)

Rokhmanova, N., Martus, J., Faulkner, R., Fiene, J., Kuchenbecker, K. J.

Modeling Shank Tissue Properties and Quantifying Body Composition with a Wearable Actuator-Accelerometer Set

Extended abstract (1 page) presented at the American Society of Biomechanics Annual Meeting (ASB), Madison, USA, August 2024 (misc)

Schneider, F., Kamal, O., Jin, Z., Schölkopf, B.

Moûsai: Efficient Text-to-Music Diffusion Models

Proceedings of the 62nd Annual Meeting of the Association for Computational Linguistics (ACL), Volume 1: Long Papers, pages: 8050-8068, (Editors: Lun-Wei Ku and Andre Martins and Vivek Srikumar), Association for Computational Linguistics, August 2024 (conference)

Wu*, W., Chen*, W., Zhang, C., Woodland, P. C.

Modelling Variability in Human Annotator Simulation

Findings of the Association for Computational Linguistics (ACL), pages: 1139-1157, (Editors: Ku, Lun-Wei and Martins, Andre and Srikumar, Vivek), Association for Computational Linguistics, August 2024, *equal contribution (conference)

Ortu*, F., Jin*, Z., Doimo, D., Sachan, M., Cazzaniga, A., Schölkopf, B.

Competition of Mechanisms: Tracing How Language Models Handle Facts and Counterfactuals

Proceedings of the 62nd Annual Meeting of the Association for Computational Linguistics (ACL), Volume 1: Long Papers, pages: 8420-8436, (Editors: Lun-Wei Ku and Andre Martins and Vivek Srikumar), Association for Computational Linguistics, August 2024, *equal contribution (conference)

Kumar, I., Jin, Z., Mokhtarian, E., Guo, S., Chen, Y., Kiyavash, N., Sachan, M., Schölkopf, B.

CausalCite: A Causal Formulation of Paper Citations

Findings of the Association for Computational Linguistics (ACL), pages: 8395-8410, (Editors: Ku, Lun-Wei and Martins, Andre and Srikumar, Vivek), Association for Computational Linguistics, August 2024 (conference)

Kulits, P., Feng, H., Liu, W., Abrevaya, V., Black, M. J.

Re-Thinking Inverse Graphics with Large Language Models

Transactions on Machine Learning Research, August 2024 (article)

Chen*, W., Horwood*, J., Heo, J., Hernández-Lobato, J. M.

Leveraging Task Structures for Improved Identifiability in Neural Network Representations

Transactions on Machine Learning Research, August 2024, *equal contribution (article)

Schulz, A., Serhat, G., Kuchenbecker, K. J.

Adapting a High-Fidelity Simulation of Human Skin for Comparative Touch Sensing

Extended abstract (1 page) presented at the American Society of Biomechanics Annual Meeting (ASB), Madison, USA, August 2024 (misc)

Dmitriev, D., Szabó, K., Sanyal, A.

On the Growth of Mistakes in Differentially Private Online Learning: A Lower Bound Perspective

Proceedings of the 37th Annual Conference on Learning Theory (COLT), 247, pages: 1379-1398, Proceedings of Machine Learning Research, (Editors: Agrawal, Shipra and Roth, Aaron), PMLR, July 2024, (talk) (conference)

Buchholz, S., Schölkopf, B.

Robustness of Nonlinear Representation Learning

Proceedings of the 41st International Conference on Machine Learning (ICML), 235, pages: 4785-4821, Proceedings of Machine Learning Research, (Editors: Salakhutdinov, Ruslan and Kolter, Zico and Heller, Katherine and Weller, Adrian and Oliver, Nuria and Scarlett, Jonathan and Berkenkamp, Felix), PMLR, July 2024 (conference)

Beck, J., Bosch, N., Deistler, M., Kadhim, K. L., Macke, J. H., Hennig, P., Berens, P.

Diffusion Tempering Improves Parameter Estimation with Probabilistic Integrators for ODEs

Proceedings of the 41st International Conference on Machine Learning (ICML), 235, pages: 3305-3326, Proceedings of Machine Learning Research, (Editors: Salakhutdinov, Ruslan and Kolter, Zico and Heller, Katherine and Weller, Adrian and Oliver, Nuria and Scarlett, Jonathan and Berkenkamp, Felix), PMLR, July 2024 (conference)

Schröder, C., Macke, J. H.

Simultaneous identification of models and parameters of scientific simulators

Proceedings of the 41st International Conference on Machine Learning (ICML), 235, pages: 43895-43927, Proceedings of Machine Learning Research, (Editors: Salakhutdinov, Ruslan and Kolter, Zico and Heller, Katherine and Weller, Adrian and Oliver, Nuria and Scarlett, Jonathan and Berkenkamp, Felix), PMLR, July 2024 (conference)

Urpi, N. A., Bagatella, M., Vlastelica, M., Martius, G.

Causal Action Influence Aware Counterfactual Data Augmentation

In Proceedings of the 41st International Conference on Machine Learning (ICML), 235, pages: 1709-1729, Proceedings of Machine Learning Research, (Editors: Salakhutdinov, Ruslan and Kolter, Zico and Heller, Katherine and Weller, Adrian and Oliver, Nuria and Scarlett, Jonathan and Berkenkamp, Felix), PMLR, July 2024 (inproceedings)

Reizinger, P., Ujváry, S., Mészáros, A., Kerekes, A., Brendel, W., Huszár, F.

Position: Understanding LLMs Requires More Than Statistical Generalization

Proceedings of the 41st International Conference on Machine Learning (ICML), 235, pages: 42365-42390, Proceedings of Machine Learning Research, (Editors: Salakhutdinov, Ruslan and Kolter, Zico and Heller, Katherine and Weller, Adrian and Oliver, Nuria and Scarlett, Jonathan and Berkenkamp, Felix), PMLR, July 2024 (conference)

Chen*, W., Zhang*, M., Paige, B., Hernández-Lobato, J. M., Barber, D.

Diffusive Gibbs Sampling

Proceedings of the 41st International Conference on Machine Learning (ICML), 235, pages: 7731-7747, Proceedings of Machine Learning Research, (Editors: Salakhutdinov, Ruslan and Kolter, Zico and Heller, Katherine and Weller, Adrian and Oliver, Nuria and Scarlett, Jonathan and Berkenkamp, Felix), PMLR, July 2024, *equal contribution (conference)

Jain, S., Lubana, E. S., Oksuz, K., Joy, T., Torr, P. H. S., Sanyal, A., Dokania, P. K.

What Makes Safety Fine-tuning Methods Safe? A Mechanistic Study

ICML 2024 Workshop on Mechanistic Interpretability (Spotlight), July 2024 (conference)

Khojasteh, B., Solowjow, F., Trimpe, S., Kuchenbecker, K. J.

Multimodal Multi-User Surface Recognition with the Kernel Two-Sample Test

IEEE Transactions on Automation Science and Engineering, 21(3):4432-4447, July 2024 (article)

Shirali, A., Abebe*, R., Hardt*, M.

Allocation Requires Prediction Only if Inequality Is Low

In Proceedings of the 41st International Conference on Machine Learning (ICML), PMLR, July 2024, *equal contribution (inproceedings)

Zhang, G., Hardt, M.

Inherent Trade-Offs between Diversity and Stability in Multi-Task Benchmarks

In Proceedings of the 41st International Conference on Machine Learning (ICML), PMLR, July 2024 (inproceedings)

Dorner, F. E., Hardt, M.

Don’t Label Twice: Quantity Beats Quality when Comparing Binary Classifiers on a Budget

In Proceedings of the 41st International Conference on Machine Learning (ICML), PMLR, July 2024 (inproceedings)

Geist, A. R., Frey, J., Zhobro, M., Levina, A., Martius, G.

Learning with 3D rotations, a hitchhiker’s guide to SO(3)

In Proceedings of the 41st International Conference on Machine Learning (ICML), 235, pages: 15331-15350, Proceedings of Machine Learning Research, (Editors: Salakhutdinov, Ruslan and Kolter, Zico and Heller, Katherine and Weller, Adrian and Oliver, Nuria and Scarlett, Jonathan and Berkenkamp, Felix), PMLR, July 2024 (inproceedings)

Paulus, A., Martius, G., Musil, V.

LPGD: A General Framework for Backpropagation through Embedded Optimization Layers

In Proceedings of the 41st International Conference on Machine Learning (ICML), 235, pages: 39989-40014, Proceedings of Machine Learning Research, (Editors: Salakhutdinov, Ruslan and Kolter, Zico and Heller, Katherine and Weller, Adrian and Oliver, Nuria and Scarlett, Jonathan and Berkenkamp, Felix), PMLR, July 2024 (inproceedings)

Bouchiat, K., Immer, A., Yèche, H., Rätsch, G., Fortuin, V.

Improving Neural Additive Models with Bayesian Principles

Proceedings of the 41st International Conference on Machine Learning (ICML), 235, pages: 4416-4443, Proceedings of Machine Learning Research, (Editors: Salakhutdinov, Ruslan and Kolter, Zico and Heller, Katherine and Weller, Adrian and Oliver, Nuria and Scarlett, Jonathan and Berkenkamp, Felix), PMLR, July 2024 (conference)

Abdolahpourrostam, A., Sanyal, A., Moosavi-Dezfooli, S.

Unveiling CLIP Dynamics: Linear Mode Connectivity and Generalization

ICML 2024 Workshop on Foundation Models in the Wild, July 2024 (conference)

Xu, D., Yao, D., Lachapelle, S., Taslakian, P., von Kügelgen, J., Locatello, F., Magliacane, S.

A Sparsity Principle for Partially Observable Causal Representation Learning

Proceedings of the 41st International Conference on Machine Learning (ICML), 235, pages: 55389-55433, Proceedings of Machine Learning Research, (Editors: Salakhutdinov, Ruslan and Kolter, Zico and Heller, Katherine and Weller, Adrian and Oliver, Nuria and Scarlett, Jonathan and Berkenkamp, Felix), PMLR, July 2024 (conference)

Grigorev, A., Becherini, G., Black, M., Hilliges, O., Thomaszewski, B.

ContourCraft: Learning to Resolve Intersections in Neural Multi-Garment Simulations

In ACM SIGGRAPH 2024 Conference Papers, pages: 1-10, SIGGRAPH ’24, Association for Computing Machinery, New York, NY, USA, July 2024 (inproceedings)

Stoica, A., Nastl, V. Y., Hardt, M.

Causal Inference from Competing Treatments

In Proceedings of the 41st International Conference on Machine Learning (ICML), PMLR, July 2024 (inproceedings)

Park, J.

A Measure-Theoretic Axiomatisation of Causality and Kernel Regression

University of Tübingen, Germany, July 2024 (phdthesis)

Kremer, H., Schölkopf, B.

Geometry-Aware Instrumental Variable Regression

Proceedings of the 41st International Conference on Machine Learning (ICML), 235, pages: 25560-25582, Proceedings of Machine Learning Research, (Editors: Salakhutdinov, Ruslan and Kolter, Zico and Heller, Katherine and Weller, Adrian and Oliver, Nuria and Scarlett, Jonathan and Berkenkamp, Felix), PMLR, July 2024 (conference)

Kekić, A., Schölkopf, B., Besserve, M.

Targeted Reduction of Causal Models

40th Conference on Uncertainty in Artificial Intelligence (UAI), July 2024 (conference) To be published