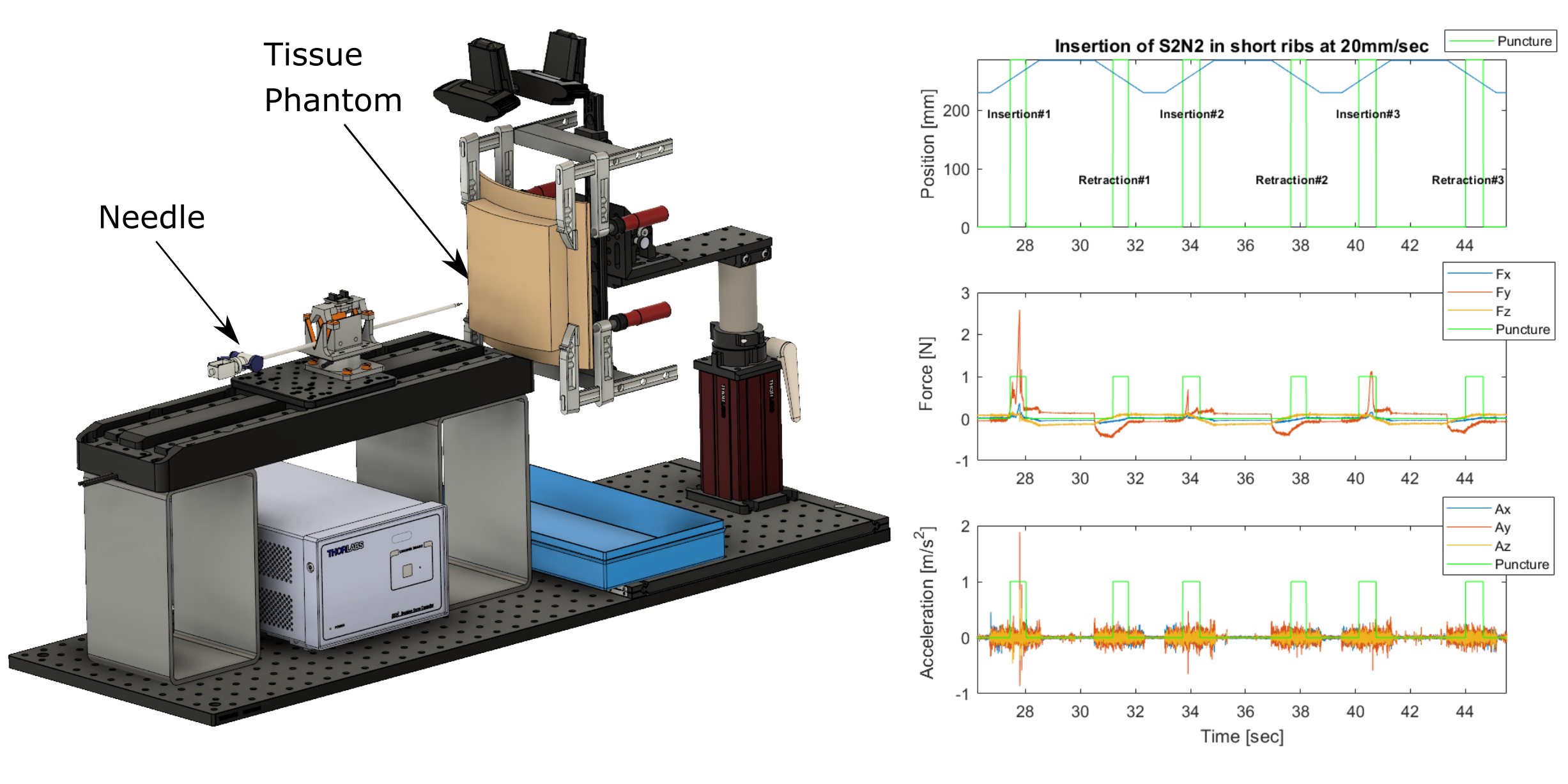

Instrumented Needle Insertion Robot

Human soft-tissue properties vary widely: factors such as a patient's age and health can change them dramatically. As a result, junior medical professionals often find it difficult to learn how much force is needed to cut through or puncture a particular type of tissue.

For example, during chest-tube insertion, the doctor must use a needle to access the patient's pleural space, puncturing the pleural membrane but going no further. Surgeons typically perform this step blind, relying on a combination of anatomical knowledge and their sense of touch to guide the needle. Surgeons report feeling the pleural puncture strongly in some patients but not in others. Thus, even well-trained surgeons may struggle, because they are limited by the resolution of their own tactile perception.

Because tissue properties vary so widely, complications can arise during the percutaneous steps of lifesaving procedures such as chest-tube insertion, and they occur more often when the practitioner is inexperienced. Of particular concern is the risk that a manually inserted needle unintentionally perforates a vital organ such as the lungs, the spleen, or even the heart.

Realistic robotic training devices would allow surgeons to learn this difficult technique before their first real procedure. Augmenting their sense of touch with sensorized needles, or using such needles to warn them of impending punctures, could also improve surgical outcomes.

In this research, we measure tissue properties during needle insertions and will use these data to develop a predictive software model of needle-tissue interaction. We began by building a custom needle-insertion device (NID0). Preliminary experiments with it indicate that its force sensor is of sufficient quality, and we are now developing predictive software models from the captured data.
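Insertion-force traces through a membrane commonly show force rising while the tissue deforms and then dropping abruptly when the needle breaks through. The sketch below is a minimal, hypothetical way to flag such drops in recorded depth and force data; it is not NID0's actual software. The function `detect_punctures`, the `PunctureEvent` record, the units (millimetres and newtons), and the default `min_drop_n` and `window` values are all illustrative assumptions rather than calibrated parameters. Note that it finds punctures after the fact; warning of an impending puncture would instead require a predictive model of the kind described above.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class PunctureEvent:
    index: int           # sample index of the force peak just before the drop
    depth_mm: float      # needle depth at that peak (assumed to be in mm)
    peak_force_n: float  # axial force at the peak (assumed to be in N)
    drop_n: float        # largest force decrease within the look-ahead window


def detect_punctures(
    depth_mm: Sequence[float],
    force_n: Sequence[float],
    min_drop_n: float = 0.3,  # hypothetical threshold, not a calibrated value
    window: int = 5,          # hypothetical look-ahead length in samples
) -> List[PunctureEvent]:
    """Flag local force peaks followed by a drop of at least min_drop_n
    within `window` samples while the needle is still advancing."""
    if len(depth_mm) != len(force_n):
        raise ValueError("depth and force traces must have equal length")
    if window < 1:
        raise ValueError("window must be at least 1 sample")

    events: List[PunctureEvent] = []
    n = len(force_n)
    i = 1
    while i < n - 1:
        # A local peak: force stopped rising and the next sample is lower.
        if force_n[i] >= force_n[i - 1] and force_n[i] > force_n[i + 1]:
            end = min(n, i + window + 1)
            drop = force_n[i] - min(force_n[i + 1:end])
            # Ignore drops that happen while the needle is being withdrawn.
            advancing = depth_mm[end - 1] > depth_mm[i]
            if drop >= min_drop_n and advancing:
                events.append(PunctureEvent(i, depth_mm[i], force_n[i], drop))
                i = end  # skip past this drop so it is not counted twice
                continue
        i += 1
    return events


if __name__ == "__main__":
    # Synthetic trace: force rises to 1.0 N at 5 mm, then falls to 0.3 N.
    depth = [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
    force = [0.0, 0.2, 0.4, 0.6, 0.8, 1.0, 0.3, 0.35, 0.4, 0.45, 0.5]
    for event in detect_punctures(depth, force):
        print(event)
```

On the synthetic trace in the `__main__` block, the function reports a single event at sample index 5 (depth 5, peak 1.0) with a drop of about 0.7. Requiring the needle to still be advancing is a simple design choice to avoid mistaking the force decrease during withdrawal for a puncture.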

Ultimately, our techniques should enable robotic surgical-training devices that react realistically (unlike current simulators and tissue phantoms), as well as sensorized needles that could augment a surgeon's sense of touch during a real insertion.
